Getting accurate, relevant answers from Large Language Models (LLMs) depends on more than the choice of model. This edition explores three techniques that can improve LLM responses: Retrieval-Augmented Generation (RAG), Prompt Engineering, and Fine-Tuning.

1. Retrieval-Augmented Generation (RAG): Integrating External Knowledge

Retrieval-Augmented Generation (RAG) enhances an LLM by pairing it with an external retrieval step. A model on its own can draw only on what it learned during training. A RAG-equipped system first retrieves documents relevant to the user's query, then adds them to the prompt so the model can use up-to-date, domain-specific information while generating its response. Grounding answers in retrieved text tends to improve accuracy and reduce hallucination.
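
The retrieve-then-augment flow described above can be sketched in a few lines. This is a toy illustration, not a production design: relevance is scored by simple word overlap (real systems typically use embeddings and a vector store), and the names `retrieve`, `build_augmented_prompt`, and `DOCUMENTS` are hypothetical. The resulting prompt would then be sent to whichever LLM you use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, document: str) -> int:
    """Toy relevance score: number of query tokens that also occur in the document."""
    query_tokens = Counter(tokenize(query))
    document_tokens = set(tokenize(document))
    return sum(n for token, n in query_tokens.items() if token in document_tokens)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents ranked by the toy relevance score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]


def build_augmented_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Prepend the retrieved documents to the question as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )


# Hypothetical knowledge base, standing in for an external document store.
DOCUMENTS = [
    "The refund policy allows returns within 30 days of purchase.",
    "Shipping usually takes three to five business days.",
    "Support is available by email on weekdays.",
]

if __name__ == "__main__":
    print(build_augmented_prompt("What is the refund policy?", DOCUMENTS, top_k=1))
```

Because the model sees the retrieved text in its prompt, updating the document store changes the answers without retraining the model.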

2. Prompt Engineering: Crafting Effective Inputs

When working with AI language models, how you ask questions matters tremendously. Prompt engineering is about crafting your requests in ways that get you the best possible answers.

Think of it like learning to communicate effectively with a brilliant but very literal assistant:

Zero-Shot Approach: Just ask your question directly without examples. This works when your request is straightforward: "Summarize this article" or "Explain quantum computing."

One-Shot Approach: Show the AI one example of what you want. It's like saying, "I'd like something similar to this..." This helps when you need a specific format or style.

Few-Shot Approach: Provide multiple examples to establish a clear pattern. This is particularly useful for complex or nuanced requests where you need the AI to recognize specific patterns.
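
The three approaches differ only in how many worked examples go into the prompt, which a small helper can make concrete. This is a minimal sketch: `build_prompt` and the `Input:`/`Output:` layout are illustrative assumptions, not a format any particular model requires.

```python
def build_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a prompt from an instruction, zero or more examples, and the query.

    An empty examples list gives a zero-shot prompt, one example gives a
    one-shot prompt, and several examples give a few-shot prompt.
    """
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


INSTRUCTION = "Classify the sentiment of each review as positive or negative."

# Hypothetical labeled examples used to establish the pattern.
EXAMPLES = [
    ("The battery lasts all day.", "positive"),
    ("It broke after a week.", "negative"),
]

zero_shot = build_prompt(INSTRUCTION, [], "Setup was quick and painless.")
one_shot = build_prompt(INSTRUCTION, EXAMPLES[:1], "Setup was quick and painless.")
few_shot = build_prompt(INSTRUCTION, EXAMPLES, "Setup was quick and painless.")

if __name__ == "__main__":
    print(few_shot)
```

Ending every variant with an open `Output:` line keeps the expected answer format identical, so the only thing that changes between them is how much of the pattern the model is shown.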

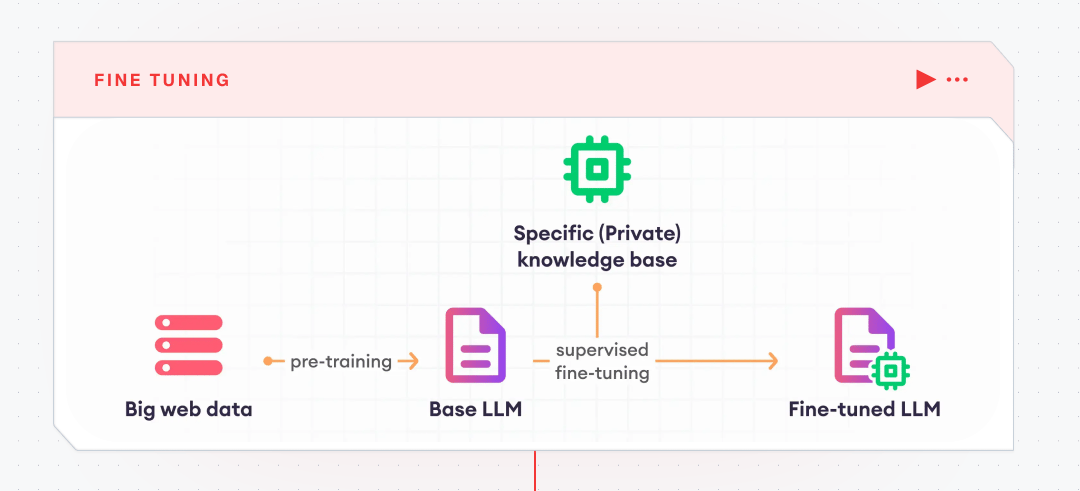

3. Fine-Tuning: Customizing Model Behavior

Fine-tuning adapts a pre-trained language model to your specific needs. By continuing to train the model on your own specialized dataset, you can teach it to recognize the unique terminology, context, and response patterns that matter in your field.
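
Most of the practical work in fine-tuning is preparing that specialized dataset. The sketch below shows one common shape for it: prompt/completion pairs serialized as JSON Lines, with a held-out validation split. The field names, the sample records, and the helpers `split_examples` and `to_jsonl` are assumptions for illustration; each training service or library defines its own expected schema, so check its documentation before uploading anything.

```python
import json
import random

# Hypothetical domain-specific examples; a real dataset would be far larger.
EXAMPLES = [
    {"prompt": "Define 'churn' for a SaaS dashboard.", "completion": "Share of customers who cancel in a period."},
    {"prompt": "Define 'MRR'.", "completion": "Monthly recurring revenue."},
    {"prompt": "Define 'ARPU'.", "completion": "Average revenue per user."},
    {"prompt": "Define 'CAC'.", "completion": "Customer acquisition cost."},
    {"prompt": "Define 'LTV'.", "completion": "Lifetime value of a customer."},
]


def split_examples(examples, validation_fraction=0.2, seed=0):
    """Shuffle deterministically and hold out a fraction for validation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)


train_set, validation_set = split_examples(EXAMPLES)

if __name__ == "__main__":
    print(to_jsonl(train_set))
```

Holding out a validation split makes it possible to check whether the tuned model has picked up the general pattern or has only memorized its training examples.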

Launch Week #2

We’ve been working on the next evolution of AI workflows at Lamatic.

A new interface, enhanced DevX, collaboration tools, and more – all launching March 24-28!

Follow us on Product Hunt to be the first to see it! 🔥

Stay ahead with the latest AI advancements—dive in today!