Large language models (LLMs) like GPT or Claude have revolutionized NLP by training on massive text corpora to predict and generate human-like text. They learn deep statistical patterns and semantics (via self-supervised learning) but do not store up-to-the-minute facts or have real-time access to your data.

In other words, an LLM’s “knowledge” is fixed at training time. On its own, it can’t access or query your internal databases, documents, or systems.

The model generates output via next-token prediction over its learned parameters, without access to real-time or external data sources.

“LLMs are great at language, but not at accessing proprietary, dynamic, or real-time data”

Some people argue that this problem can be solved by simply putting all documents directly into the LLM prompt. In reality, that approach breaks down quickly.

Why “just prompting” doesn’t scale:

Fixed context limits: LLMs have a maximum context window (only a few thousand tokens for older models, and typically hundreds of thousands for newer ones, which is still far smaller than most document collections). Anything beyond that gets truncated or ignored.

Information overload: Packing too much data into a prompt increases the chances of hallucinations or missing critical details.

Difficult to debug: Large, complex prompts are harder to maintain, harder to debug, and lead to inconsistent behavior across queries.

Inconsistent results: Long, complex prompts tend to produce higher error rates and less reliable outputs over time.

In short, prompts are great for shaping behavior, but they are not a scalable way to supply data. To build reliable systems, LLMs need a mechanism to access and ground themselves in relevant external data beyond the prompt.

So how do we ground LLMs with relevant information?

To ground LLMs we need to add a retrieval layer that fetches the exact information the model needs to generate an answer. That’s where RAG comes in.

What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) adds a retrieval step to large language models. Instead of relying only on what the model already knows, RAG first pulls relevant information from external sources — such as documents, databases, or APIs — based on the user’s question. This information is then passed to the model as context, allowing it to generate more accurate and up-to-date responses.

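The original RAG paper describes the approach as a general-purpose recipe for combining a language model with an external knowledge source. In practice today, it is most often used to connect an existing LLM to a knowledge source without fine-tuning the model.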

Typical RAG Workflow

The RAG workflow typically works in three steps:

The user query triggers a retrieval of top-matching documents or data (semantic search)

Those results are combined into an augmented prompt

The LLM then generates a response using both the original query and this retrieved context.

In other words:

“User Query + Retrieved Context ⇒ LLM”

This grounded prompt can substantially reduce hallucinations and the other problems with prompt-only approaches discussed above.
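As a rough illustration of the "augmented prompt" step, here is a minimal sketch of how a query and retrieved chunks might be combined. The function name `build_augmented_prompt`, the instruction wording, and the numbered-source layout are all assumptions made for this example, not a prescribed format.

```python
def build_augmented_prompt(query: str, retrieved_chunks: list[str]) -> str:
    """Combine the user query with retrieved context into one prompt string."""
    # Number each chunk so the model (and a reviewer) can point back to a source.
    context = "\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context is not sufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Hypothetical chunks standing in for real retrieval results.
    chunks = [
        "Refunds are accepted within 30 days.",
        "Shipping takes 5 days.",
    ]
    print(build_augmented_prompt("What is the refund window?", chunks))
```

Numbering the sources is one simple way to make answers easier to trace back to the retrieved text.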

How RAG Works: Embeddings and Vector Search

Semantic Search is the heart of RAG. To find relevant info:

We first convert text into vector embeddings (high-dimensional numerical representations that encode meaning). Intuitively, similar texts map to nearby vectors. For instance, phrases like “refund policy” and “return rules” would have close vectors. We can generate embeddings using pre-trained embedding models (e.g. a BERT-based model or an OpenAI embedding model).

A vector database then stores these embeddings.

When a user asks a question, the query is also embedded into this semantic space.

The system computes the nearest neighbors (e.g. by cosine similarity or L2 distance) in the vector DB to retrieve the top-k most relevant chunks.

“Vector Search matches on semantic meaning, not exact keywords”

For example, a user query about “stop a container” can return results about “terminate instance” because their vectors are similar.

This semantic retrieval works even when wording differs, making RAG far more flexible than keyword search alone.
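To make the nearest-neighbor idea concrete, here is a small sketch of cosine-similarity search. The three-dimensional vectors are hand-made values chosen for illustration only; real embeddings come from an embedding model and have hundreds or thousands of dimensions. The names `cosine_similarity` and `top_k` are assumptions for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, index, k=2):
    """Return the k (score, text) pairs most similar to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in index.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hand-made vectors standing in for real embeddings (illustrative values only).
index = {
    "terminate instance": [0.9, 0.1, 0.0],
    "refund policy": [0.0, 0.2, 0.9],
    "return rules": [0.1, 0.3, 0.8],
}
query = [0.8, 0.2, 0.1]  # pretend embedding of "stop a container"

if __name__ == "__main__":
    for score, text in top_k(query, index, k=2):
        print(f"{score:.3f}  {text}")
```

With these toy values, "terminate instance" ranks first for the query even though it shares no words with "stop a container". A production vector database would typically use an approximate nearest-neighbor index rather than this brute-force scan.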

End-to-End RAG Pipelines

RAG consists of two pipelines:

Indexing Pipeline

The indexing pipeline is responsible for transforming raw external data and storing it in a vector database.

A typical indexing pipeline includes the following steps:

Extract Data from External Sources: Data is collected from multiple sources such as PDFs, Word documents, databases, APIs, web pages, internal tools (e.g., Notion), or data lakes.

Data Processing: Once data is extracted, it is normalized into a common text format, cleaned to remove noise (headers, footers, boilerplate text) and duplicates, then enriched with metadata (source, timestamps, permissions, tags) to support filtering and access control during retrieval.

Chunking: Large documents are split into smaller, semantically meaningful chunks to improve retrieval accuracy and reduce context loss.

Embedding Generation: Each chunk is converted into a vector using an embedding model.

Vector Storage: The embeddings, along with their metadata, are stored in a vector database to enable fast similarity search.
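The indexing steps above can be sketched in a few lines. This is a simplified illustration under stated assumptions: `toy_embed` is a hashed bag-of-words stand-in for a real embedding model, chunking uses fixed word windows with overlap instead of true semantic boundaries, and the "vector database" is a plain Python list. All names here are hypothetical.

```python
import re
import zlib
from dataclasses import dataclass, field

DIM = 64  # toy embedding size; real models use far more dimensions


def clean(text: str) -> str:
    """Normalize whitespace. A real pipeline would also strip headers, footers, duplicates."""
    return re.sub(r"\s+", " ", text).strip()


def chunk(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word windows that overlap, so context is not cut abruptly."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks, start = [], 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
        start += max_words - overlap
    return chunks


def toy_embed(text: str) -> list[float]:
    """Hashed bag-of-words vector. An assumption standing in for an embedding model."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode()) % DIM] += 1.0
    return vec


@dataclass
class Record:
    text: str
    vector: list[float]
    metadata: dict = field(default_factory=dict)


def index_document(raw_text: str, source: str, store: list) -> list:
    """Clean, chunk, embed, and append each chunk with its metadata to the store."""
    for position, piece in enumerate(chunk(clean(raw_text))):
        store.append(Record(piece, toy_embed(piece), {"source": source, "chunk": position}))
    return store
```

Keeping the source and chunk position in the metadata is what later allows filtering, access control, and citations at query time.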

Query Pipeline

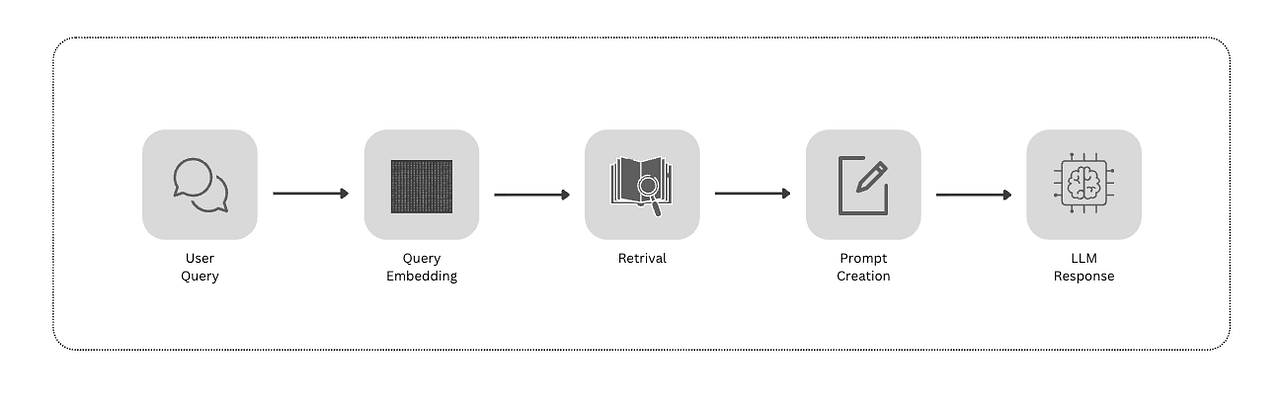

The query pipeline processes user questions and generates responses. It typically follows these steps:

User Query: The user submits a question in natural language.

Query Embedding: The query is converted into a vector using the same embedding model used during indexing.

Retrieval: The query vector is used to retrieve the most relevant document chunks from the vector database using nearest-neighbor search.

Prompt Construction: The retrieved text is combined with the original user query to form an augmented prompt.

LLM Response: This augmented prompt is sent to the LLM, which generates an answer.

These pipelines essentially treat the LLM like a completion engine over your data.

In short, RAG:
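The query steps above can be sketched end to end as follows. Everything here is a stand-in: `toy_embed` uses word counts, which only match shared words and so do not behave like a real semantic embedding model. The index is an in-memory list, and `llm` is any callable that takes a prompt string. The function names are assumptions for illustration.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Word-count vector; a placeholder for the embedding model used at indexing time."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, indexed_chunks, top_k=2):
    """Embed the query and return the top_k most similar chunk texts."""
    q = toy_embed(query)
    ranked = sorted(indexed_chunks, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]


def run_query(query, indexed_chunks, llm, top_k=2):
    """Retrieve context, build the augmented prompt, and pass it to the LLM callable."""
    context = retrieve(query, indexed_chunks, top_k)
    prompt = (
        "Context:\n"
        + "\n".join(f"- {c}" for c in context)
        + f"\n\nQuestion: {query}\nAnswer:"
    )
    return llm(prompt)


# Tiny in-memory index: (chunk text, chunk vector) pairs.
chunks = [
    "Refunds are issued within 30 days of purchase.",
    "Containers can be stopped from the dashboard.",
    "Support is available on weekdays.",
]
indexed = [(text, toy_embed(text)) for text in chunks]

if __name__ == "__main__":
    # The "LLM" here just echoes the prompt so the augmented input is visible.
    print(run_query("How do I get refunds?", indexed, llm=lambda p: p, top_k=1))
```

The important design point is that the query and the chunks go through the same embedding function, so their vectors live in the same space.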

“Crafts an enriched prompt by augmenting the user’s original prompt with additional contextual information — and passes it on as input to the LLMs”

Implementing RAG with Lamatic.ai

In this guide, we’ll walk through how to build a Retrieval-Augmented Generation (RAG) pipeline using Lamatic.ai. As part of this walkthrough, we’ll create a chatbot capable of answering user queries based on documents stored in Google Drive.

Step 1: Create a Lamatic Project

Before getting started, you’ll need to create an account and set up a project in Lamatic.

Sign up to Lamatic.ai and access the Studio: https://studio.lamatic.ai/signup

Create a new organization and set up a project within it.

Step 2: Build the Indexing Pipeline

Once your project is ready, the next step is to create the indexing pipeline. This pipeline will ingest documents from Google Drive, process them, and store their embeddings for retrieval.

Step 3: Build the Query Pipeline

After the indexing pipeline is set up, we’ll move on to creating the query pipeline. This pipeline will handle user questions, retrieve the most relevant context from the indexed data, and generate accurate responses using an LLM.

👇🏻 Watch this recording to see how you can build a production-ready RAG pipeline in under 10 minutes

Common RAG Pitfalls and Limits

While powerful, RAG has its own failure modes.

Garbage in, garbage out — if retrieval goes wrong, generation will too.

Common mistakes include:

Poor chunking: Breaking documents into chunks is an art. Too-large chunks may mix unrelated info; too-small chunks can lose context. When chunks aren’t aligned with logical or semantic boundaries, retrieval quality drops significantly.

Retrieving too much or too little: Fetching too many chunks can exceed the LLM’s context window. Fetching too few risks missing key facts. Getting the “top-k” right (and possibly re-ranking) is crucial.

Ignoring latency: RAG systems introduce additional retrieval steps before generation. As document stores grow or queries become more complex, these lookups can increase response times. For real-time applications, this added latency must be carefully optimized or masked to maintain a smooth user experience.

Blind trust in outputs: Even with retrieved data, the LLM can misinterpret the context or hallucinate beyond it. RAG reduces hallucinations but doesn’t eliminate them. Answers should still be verifiable (e.g. through citations) and, where the stakes warrant it, checked by human review.
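One way to guard against retrieving too much is to pack ranked chunks into a fixed token budget before building the prompt. The sketch below assumes a rough heuristic of about four characters per token for English text. In practice you would count tokens with the target model's own tokenizer. The names `estimate_tokens` and `pack_context` are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (about 4 characters per token); not a real tokenizer."""
    return max(1, len(text) // 4)


def pack_context(ranked_chunks, budget_tokens):
    """Walk chunks in rank order, keeping each one that still fits in the budget."""
    selected, used = [], 0
    for chunk in ranked_chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget_tokens:
            continue  # skip chunks that would overflow; smaller ones may still fit
        selected.append(chunk)
        used += cost
    return selected, used


if __name__ == "__main__":
    ranked = ["a" * 40, "b" * 400, "c" * 40]  # best match first
    kept, used = pack_context(ranked, budget_tokens=25)
    print(len(kept), used)
```

Skipping an oversized chunk instead of stopping at it is a design choice: it favors filling the budget over strictly preserving rank order.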

When RAG Falls Short

Complex reasoning across many documents: RAG struggles when answers require stitching together insights from a large set of documents with deep cross-references.

Missing or stale data: Retrieval can only surface what exists; outdated, incomplete, or incorrect data leads to unreliable outputs.

Multi-step workflows: Tasks that require planning, state, or iterative reasoning across steps often exceed what simple retrieve-then-generate can handle.

High-precision requirements: In domains like legal or medical systems, even small inaccuracies are unacceptable, so RAG output alone is not reliable enough without additional verification.

What to Do Instead (or Alongside RAG)

To build robust AI systems, RAG can be one component of a larger architecture. For example:

Agentic workflows (Tools): Rather than relying on a single monolithic prompt, build agents that can dynamically invoke external APIs or “tools” (including vector databases) at inference time.

Deterministic Systems + LLMs: Use rule-based or programmatic logic for tasks that require exact, repeatable results, such as validation, calculations, and strict comparisons, while leveraging LLMs for natural language understanding and generation. Critical operations like exact date checks or sensitive decision rules should never depend solely on LLMs.

Human-in-the-loop: For high-stakes or ambiguous scenarios, a human should validate or make the final decision. The system can flag uncertain cases for human review, much like cruise control in a car: the system handles routine driving, but a human steps in when conditions become complex or unsafe. This hybrid approach combines the speed and scale of machines with human judgment.

Hybrid search: Many modern RAG systems combine keyword-based search with semantic (vector) search. This hybrid approach improves both accuracy and robustness by capturing exact term matches as well as semantic relevance. In practice, a fast BM25-based retriever is often used alongside a vector database, with results merged to provide stronger grounding for the LLM.
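The article does not say how keyword and vector results are merged. One common option is reciprocal rank fusion (RRF), sketched below as an illustration, not as the method any particular system uses. Each document's score is the sum of 1 / (k + rank) over the result lists it appears in, and k = 60 is a widely used default. The document IDs are made up.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one list, best first."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Documents near the top of any list earn a larger contribution.
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results from a BM25 retriever and a vector search.
keyword_hits = ["doc_b", "doc_a", "doc_d"]
vector_hits = ["doc_a", "doc_c", "doc_b"]
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])

if __name__ == "__main__":
    print(fused)
```

Because RRF works on ranks and ignores raw scores, it avoids having to normalize BM25 scores against cosine similarities, which live on different scales.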

Key Takeaways

Prompts can’t do everything: Even the best prompt engineering hits a ceiling. LLMs don’t have access to real-time or proprietary data, and without guidance, they’re prone to hallucinations.

RAG connects models to real data: RAG works by pulling in fresh, relevant context before generation, leading to responses that are more accurate, grounded, and useful.

Data beats clever prompting: A RAG system is only as strong as the data behind it. Well-curated sources, clean structure, and smart chunking matter far more than longer or more complex prompts.

Design systems, not prompt tricks: Move beyond one-off hacks. Combine retrieval, agents, APIs, hybrid search, and even human-in-the-loop workflows. A well-engineered architecture beats endlessly tweaking prompts.

In summary, an LLM should be treated as just one component of a larger system. Strong engineering, spanning data pipelines, retrieval, fallbacks, and monitoring, will consistently outperform endless prompt tweaking.

“Great AI products are engineered, not merely prompted”

Thanks for reading! 🙌

If you’ve made it this far, you already know the core message: prompting alone won’t get you to production-grade AI.

If this article resonated, don’t stop at theory. The teams that win in 2026 will be the ones turning LLMs into grounded, reliable, measurable systems — not just impressive chat interfaces.