In every major technology shift, there comes a moment when excitement runs ahead of reality. For AI, that moment arrived in 2024–2025.

Capital flooded in, valuations exploded, and “AI-first” became the default pitch rather than a meaningful distinction. The question many are now asking is simple:

Are we in an AI bubble — or witnessing the early stages of a structural transformation?

The honest answer is: both.

Why the AI Bubble Is Real

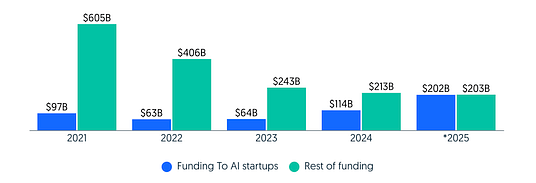

2025 made one thing painfully obvious: capital poured into AI at a rate that stretched markets and expectations.

Massive capital concentration: By late 2025, startups labelled “AI” had attracted roughly $200 billion in venture funding — more than any other sector. In Q3 2025 alone, AI deals accounted for 53% of VC dollars, and a very large share of that money went to a handful of “megadeals” (Anthropic raising ~$13B, xAI ~$10B).

Fewer bets, bigger bets: While total dollars remained high, deal counts fell — roughly 44% from early 2022 to late 2025 — meaning the market funneled most funding into a small number of large players while starving smaller teams.

Valuations at bubble heights: Tech indices and major market concentrations (the so-called “Magnificent Seven” era) pushed valuations into territory historically associated with bubble peaks. When a third of the market’s value rests on a handful of players, sentiment becomes fragile.

Business reality lagging rhetoric: Corporate pilots and POCs frequently failed to translate into production value. Reported AI pilot failure rates ranged from 75% to 95%, often because of overambitious scope, poor integration, and absent ROI. Analyst firms warned that only a small fraction of vendors were truly capable of delivering operational AI at scale.

Put together, those items are the textbook signs of a speculative bubble:

Exuberant money, concentration in a few headline names, and a wide gulf between expectations and delivered value.

Why asking “Is AI a bubble?” misses the main question

That simple yes-or-no framing is tempting — but it misses the point.

The real question is: Which parts of the AI ecosystem are inflated by hype, and which parts are becoming foundational?

Think back to the dot-com era. Yes, many companies that rode the hype collapsed. Yes, valuations reset. But the internet itself was not a mirage; it was a foundation that rewrote commerce, communication, and media.

AI in 2025–26 looks similar: some categories are dangerously overheated while the underlying technologies and economics are evolving in durable ways. So:

Yes — speculative behavior will correct. Some startups will fail, hiring and investment will re-center around durable business models, and parts of the market will consolidate. Price discovery is overdue.

No — the field is not merely a fad. Model and systems advances, falling deployment costs, a shift toward multimodality, and deeper embedding of AI in workflows create a long-term structural shift.

The likely path forward (a pragmatic roadmap)

So what should we expect going forward? A hybrid outcome: short-term market correction, but steady and meaningful technological progress underneath.

Consolidation among startups and vendors. Capital constrained to the largest, most credible players means smaller outfits either get acquired, pivot to niche use cases, or fold. The market will sort vendors by real engineering capability and product-market fit.

Rationalization of infrastructure economics. As competition among model and infra providers intensifies, pricing models will mature (and prices will likely fall), SLAs will become more meaningful, and opaque “model as commodity” claims will be tested against real total cost of ownership (TCO).

From experiments to systems. The emphasis will shift from isolated model-releases and flashy demos to systems thinking: integration, observability, data quality, compliance, and lifecycle engineering.

Winners built on fundamentals. Firms that make AI core to how they build products and run their operations — not as one-off projects — will capture sustained advantage.

What survival looks like for early-stage companies and builders

If you’re building in 2026, surviving a market correction is a short-term goal. Winning requires long-term strategy. The teams that make it will focus on three core areas:

1. Real business metrics (not hype)

Unit economics: Know exactly what each unit of value costs you — cost per converted user, cost per automated ticket resolved, cost per workflow run — and keep optimizing it.

Payback and ROI: Be clear about when an AI feature pays for itself. If costs outweigh returns for too long, narrow the scope or rethink the feature.

Distribution and retention: Even the best model is useless without users. AI capability must be tightly connected to growth, adoption, and retention.
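The unit-economics and payback questions above reduce to a few lines of arithmetic. The sketch below is one possible way to frame them, not a standard formula set: the class name, field names, and all figures are hypothetical, and it assumes costs and value are roughly constant month to month.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeatureEconomics:
    """Hypothetical monthly figures for one AI feature (illustrative values only)."""
    monthly_cost: float        # inference, infra, and support spend per month
    units_delivered: int       # e.g. tickets resolved or workflow runs per month
    value_per_unit: float      # revenue earned or cost avoided per unit
    upfront_investment: float  # one-time build and integration cost

    def cost_per_unit(self) -> float:
        if self.units_delivered == 0:
            raise ValueError("no units delivered; cost per unit is undefined")
        return self.monthly_cost / self.units_delivered

    def monthly_margin(self) -> float:
        # Value created minus what it cost to create it.
        return self.units_delivered * self.value_per_unit - self.monthly_cost

    def payback_months(self) -> Optional[float]:
        # None means the feature never pays for itself at current rates.
        margin = self.monthly_margin()
        if margin <= 0:
            return None
        return self.upfront_investment / margin


# Made-up example: a support bot resolving 4,000 tickets a month.
ticket_bot = FeatureEconomics(
    monthly_cost=6000.0,
    units_delivered=4000,
    value_per_unit=2.5,
    upfront_investment=20000.0,
)
print(ticket_bot.cost_per_unit())   # cost per automated ticket resolved
print(ticket_bot.payback_months())  # months until the build cost is recovered
```

A `None` payback is the signal the text describes: costs outweigh returns, so the scope needs to narrow or the feature needs a rethink.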

2. Operational discipline

Integration, not isolation: Ship AI features that fit naturally into how users already work. Demos that need manual cleanup or custom infrastructure won’t scale.

Observability and measurement: Track latency, drift, failure modes, per-customer performance, and economic impact. Treat models like critical production services.

Iteration and feedback loops: Deploy small, measurable experiments to learn quickly rather than big-bang launches that fail silently.
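As a rough illustration of treating model calls like a production service, the sketch below records latency, cost, and failures per customer in memory. It is a minimal sketch under stated assumptions: `CallMetrics` and its methods are hypothetical names, the per-call cost is supplied by the caller, and a real system would export these numbers to a proper metrics backend rather than keep them in a dictionary.

```python
import time
from collections import defaultdict
from statistics import mean


class CallMetrics:
    """In-memory record of model calls, grouped by customer (illustrative only)."""

    def __init__(self):
        # customer id -> list of (latency_s, cost_usd, ok) tuples
        self._records = defaultdict(list)

    def record(self, customer, latency_s, cost_usd, ok):
        self._records[customer].append((latency_s, cost_usd, ok))

    def track(self, customer, fn, cost_usd):
        """Run fn(), time it, and record the outcome; exceptions are re-raised."""
        start = time.perf_counter()
        try:
            result = fn()
        except Exception:
            self.record(customer, time.perf_counter() - start, cost_usd, ok=False)
            raise
        self.record(customer, time.perf_counter() - start, cost_usd, ok=True)
        return result

    def summary(self, customer):
        rows = self._records.get(customer, [])
        if not rows:
            return {"calls": 0, "failure_rate": 0.0,
                    "mean_latency_s": 0.0, "total_cost_usd": 0.0}
        failures = sum(1 for _, _, ok in rows if not ok)
        return {
            "calls": len(rows),
            "failure_rate": failures / len(rows),
            "mean_latency_s": mean(latency for latency, _, _ in rows),
            "total_cost_usd": sum(cost for _, cost, _ in rows),
        }


# Made-up observations for one customer: four calls, one failure.
metrics = CallMetrics()
metrics.record("acme", latency_s=0.2, cost_usd=0.01, ok=True)
metrics.record("acme", latency_s=0.4, cost_usd=0.01, ok=True)
metrics.record("acme", latency_s=0.6, cost_usd=0.01, ok=False)
metrics.record("acme", latency_s=0.8, cost_usd=0.01, ok=True)
summary = metrics.summary("acme")
print(summary)
```

Keeping the summary per customer matters because an aggregate failure rate can look healthy while one account is having a bad experience.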

3. Defensibility beyond model size

Proprietary data and workflows: Data created through everyday product usage often becomes the strongest long-term advantage.

Vertical focus: Deep domain knowledge turns general AI into real differentiation. Industry-specific prompts, datasets, and workflows really matter.

Integration depth: The more deeply you’re embedded in a customer’s stack (APIs, UI/UX, identity, billing), the harder you are to replace.

Practical checklist for founders and product teams (quick, actionable)

Know your unit economics: Do you know the real cost of one AI request (compute + storage + infra) and the value or revenue it creates? If not, start here.

Prove impact fast: Can you show that this AI feature reduces friction or increases revenue for real users within 90 days? If you can’t show measurable impact quickly, you need to shrink scope.

Make metrics visible and owned: Are your metrics visible to the CEO and to at least one dedicated engineer who owns model performance? No visibility means no accountability.

Plan for failure: Do you have rollback plans and runbooks for outages, hallucinations, or data drift? If not, build them now — before users feel the pain.

Treat data like infrastructure: Is your data pipeline repeatable, measurable, and cost-tracked? One-off labeling and ad-hoc features don’t scale and will break later.
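One way to make the "plan for failure" item concrete is an automatic rollback trigger. The sketch below is a hedged example, not a recommended production design: `RollbackGuard`, its thresholds, and the window size are all hypothetical, and "failure" here is whatever signal a team chooses (an exception, a failed validation, a flagged hallucination).

```python
from collections import deque


class RollbackGuard:
    """Watches the most recent outcomes and signals when to fall back (illustrative)."""

    def __init__(self, window=50, max_failure_rate=0.2, min_samples=10):
        # deque with maxlen drops the oldest outcome automatically.
        self._outcomes = deque(maxlen=window)
        self.max_failure_rate = max_failure_rate
        self.min_samples = min_samples

    def observe(self, ok):
        self._outcomes.append(bool(ok))

    def failure_rate(self):
        if not self._outcomes:
            return 0.0
        failures = sum(1 for ok in self._outcomes if not ok)
        return failures / len(self._outcomes)

    def should_roll_back(self):
        # Require a minimum sample size so one early failure does not trip the guard.
        enough_data = len(self._outcomes) >= self.min_samples
        return enough_data and self.failure_rate() > self.max_failure_rate


# Made-up sequence: four successes followed by three failures.
guard = RollbackGuard(window=10, max_failure_rate=0.2, min_samples=5)
for ok in [True, True, True, True, False, False, False]:
    guard.observe(ok)
print(guard.should_roll_back())
```

The runbook then only needs to say what "roll back" means for the feature in question, such as switching to a previous model version or a non-AI fallback path.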

What investors and policymakers should watch

Investor focus: Discriminate between capital-intensive platforms and high-leverage product companies. Look for revenue expansion driven by operational metrics (ARPU, retention), not merely new model announcements.

Policy focus: Regulators should watch concentration risks (market power, data monopolies) and systemic dependencies (critical infrastructure tied to a small number of model providers).

Macro watchers: If AI-driven spending added more than 1% to GDP in early 2025 (a plausible estimate based on industry reports), then a meaningful slowdown in AI investment could have macro effects, which is another reason to differentiate hype from durable activity.

So… Bubble or Breakthrough?

Calling AI “a bubble” is a useful shorthand for diagnosing speculative excess, but it misses the fuller picture. The more accurate view is pragmatic and two-speed.

Short Term: Expect a messy but necessary correction. Capital will refocus, valuations will reset, and weaker, hype-driven bets will fall away. This phase will be painful for many — but healthy for the ecosystem. It clears noise, forces accountability, and exposes which teams were building stories versus systems.

Mid to Long Term: AI doesn’t disappear — it settles. It becomes infrastructure: pervasive, embedded, and essential in some domains, while commoditized and low-margin in others. Real economic value will concentrate where AI is tightly coupled to customer workflows, proprietary data, and distribution — not where the models are merely the largest or the newest.

So is AI a bubble or a breakthrough? The honest answer is both — and incomplete on its own. Speculation ran ahead of execution, but execution is now catching up. 2026 will mark a clear transition:

Model obsession → system reliability

Demos → deployments

Hype → operating discipline

For builders, the prescription is clear. Stop selling the promise and start engineering the proof. Do the unglamorous work: unit economics, integration, measurement, iteration. Treat AI as infrastructure, not magic.

The companies that survive won’t be the loudest or the most funded. They’ll be the ones that build quietly, operate rigorously, and earn trust through results. That’s how real technology revolutions mature — not with a bang, but with a hard reset and a sharper focus.

Where Lamatic Fits in the Post-Bubble AI Era

If 2025 exposed the limits of model-centric thinking, 2026 will reward teams that master AI as a system.

This is precisely the gap Lamatic is built to address.

Most organizations don’t fail at AI because models are weak — they fail because production AI systems are hard to design, test, and operate. Agent workflows need memory, branching logic, retries, observability, and governance. Without these foundations, even the most powerful models degrade quickly in real-world environments.

Lamatic approaches AI from the opposite direction: not as a prompt playground, but as engineering infrastructure. We help teams:

Design multi-step agent workflows visually and programmatically

Add state, control flow, and tool orchestration without custom glue code

Test and debug agent behavior before deploying to production

Monitor execution, costs, and failures in live systems

Build AI applications that are reproducible, auditable, and scalable

Don’t just take our word for it. Build your first workflow today.

In a market moving from experimentation to execution, this shift matters. As enterprises consolidate AI efforts in 2026, they will standardize on platforms that reduce operational risk and increase developer velocity — not ones that simply expose another model endpoint.

The AI bubble will deflate hype and elevate infrastructure.

Lamatic sits squarely in that second category: enabling teams to move beyond demos and build AI systems that actually survive contact with reality.

In the post-bubble era, the winners won’t be defined by how intelligent their models are — but by how reliably their systems run.