Remember the drawer full of tangled cables you used to have? A different charger for your phone, another for your laptop, a proprietary connector for your camera. It was chaotic, expensive, and frustrating.

Then came USB-C. Suddenly, one standard port could handle power, data, and video across almost every device. It just worked.

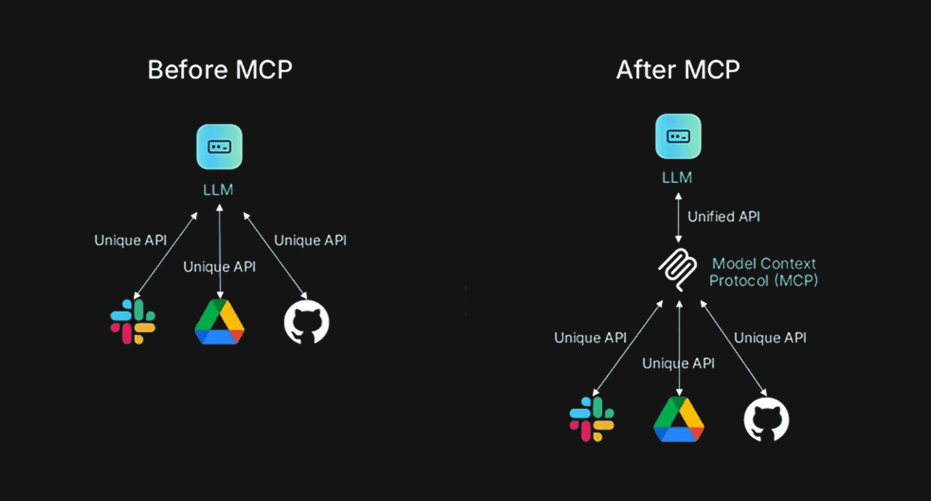

AI development is currently stuck in that "drawer full of cables" phase. We are building incredible models, but connecting them to the real world (your databases, calendars, and crucial business tools) is a messy tangle of custom integrations.

Enter the Model Context Protocol (MCP). Introduced by Anthropic, MCP is poised to be the "USB-C moment" for the AI industry, and for SaaS founders, it changes everything.

Why Building AI Apps is So Slow

Before MCP, if you wanted your AI application to read a user's Google Calendar, check a Jira ticket, or query your internal PostgreSQL database, you had to build a bespoke bridge.

You wrote custom code to authenticate against an API, wrangled data into a format the LLM could understand, and then wrote more code to interpret the LLM’s response and turn it back into an API action.

For every new integration, you had to rebuild that bridge from scratch. The result is brittle and time-consuming to maintain, and it is pure "glue code": necessary work that adds zero unique feature value to your product.

The Solution: The Universal AI Port

MCP acts like a universal "USB port" for AI models. It establishes a standardized language for how AI applications act as clients and how data sources and tools act as servers.

The Client (The AI): Speaks the standard MCP language.

The Server (Your Data/Tools): Speaks the exact same language.

Because they share a protocol, connecting them takes configuration rather than custom code. You no longer need to teach the AI how to talk to Google Maps; you plug the Google Maps MCP server into the AI client, and the client discovers the server's capabilities when it connects.
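To make the "shared language" concrete, here is a minimal sketch of the kind of exchange involved. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the MCP specification. Everything else here is an assumption for illustration: the `travel_time` tool, its canned answer, and the in-memory `handle` function standing in for a real server. A real session also begins with an initialization handshake, which this sketch leaves out.

```python
import json
from typing import Any

# Toy registry of tools. The tool name, schema, and fixed answer are made up.
TOOLS: dict[str, dict[str, Any]] = {
    "travel_time": {
        "description": "Estimate travel time in minutes between two places.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "origin": {"type": "string"},
                "destination": {"type": "string"},
            },
            "required": ["origin", "destination"],
        },
        "handler": lambda a: f"{a['origin']} to {a['destination']}: 42 minutes",
    }
}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer one JSON-RPC 2.0 request like a heavily simplified MCP server."""
    method = request["method"]
    params = request.get("params", {})
    base = {"jsonrpc": "2.0", "id": request["id"]}

    if method == "tools/list":
        # Discovery: describe every tool, including its argument schema.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {**base, "result": {"tools": tools}}

    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return {**base, "error": {"code": -32602, "message": "Unknown tool"}}
        text = tool["handler"](params.get("arguments", {}))
        return {**base, "result": {"content": [{"type": "text", "text": text}], "isError": False}}

    # Standard JSON-RPC error code for an unsupported method.
    return {**base, "error": {"code": -32601, "message": "Method not found"}}


if __name__ == "__main__":
    print(json.dumps(handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}), indent=2))
```

The point of the sketch is that the client never needs Google Maps-specific code: it asks what tools exist, reads their schemas, and calls them by name.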

What is an MCP Server?

An MCP Server is simply a small application designed to expose specific capabilities to an AI in that standardized format. Think of it as a specialized adapter that translates your tool's native language into "MCP-speak."

Examples of what MCP servers unlock:

GitHub MCP Server: Allows the AI to independently read issue threads, summarize pull requests, or even push code.

PostgreSQL MCP Server: Gives the AI safe, structured access to query your database to answer user questions about their data.

Google Maps MCP Server: Enables the AI to calculate travel times, find nearby businesses, or geolocate addresses.

The Core Concepts

An MCP server isn't just a data dump. It exposes three distinct types of capabilities that define how the AI interacts with the world:

Resources (Read-Only Data): Think of this as giving the AI access to a library. It could be a local file, a specific database table, or API documentation. The AI can read it to gain context but cannot change it.

Tools (Executable Functions): This is the most common and powerful use case. Tools are the "hands" of the AI. They are executable functions like "Send an Email," "Clear Cache," "Update CRM Record," or "Run Currency Conversion."

Prompts (Pre-defined Templates): These act as guidebooks. Prompts help the AI understand the best way to interact with the data or tools provided by the server, ensuring reliable and safe interactions.
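The three capability types can be sketched with a toy, in-memory registry. This is not the official MCP SDK; `ToyServer`, the `docs://refund-policy` URI, and the example functions are all hypothetical names chosen for illustration. The sketch only shows how one server can hold read-only resources, callable tools, and prompt templates side by side.

```python
from typing import Callable


class ToyServer:
    """In-memory stand-in for an MCP server (illustrative, not the real SDK)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.resources: dict[str, Callable[[], str]] = {}
        self.tools: dict[str, Callable[..., object]] = {}
        self.prompts: dict[str, Callable[..., str]] = {}

    def resource(self, uri: str):
        """Register a read-only data source under a URI."""
        def register(fn: Callable[[], str]) -> Callable[[], str]:
            self.resources[uri] = fn
            return fn
        return register

    def tool(self, fn: Callable[..., object]) -> Callable[..., object]:
        """Register an executable function the AI may call."""
        self.tools[fn.__name__] = fn
        return fn

    def prompt(self, fn: Callable[..., str]) -> Callable[..., str]:
        """Register a reusable prompt template."""
        self.prompts[fn.__name__] = fn
        return fn


server = ToyServer("crm-demo")


@server.resource("docs://refund-policy")
def refund_policy() -> str:
    # Resource: context the AI can read but not modify.
    return "Refunds are available within 30 days of purchase."


@server.tool
def convert_currency(amount: float, rate: float) -> float:
    # Tool: an action with inputs and a result.
    return round(amount * rate, 2)


@server.prompt
def answer_refund_question(question: str) -> str:
    # Prompt: a template that steers how the resource and tools are used.
    return f"Use the docs://refund-policy resource to answer. Customer question: {question}"
```

Keeping the three registries separate mirrors the distinction in the text: resources are read, tools are executed, and prompts guide how the other two are used.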

Why SaaS Founders Need to Care Right Now

If you are building an AI-native SaaS product, your speed of execution is everything.

The primary value of adopting MCP is simple: Stop building glue code and start building features.

By standardizing how your AI connects to external systems, you drastically reduce the engineering overhead required to launch new integrations. Instead of spending weeks figuring out the nuances of a third-party API and trying to force an LLM to output the correct JSON schema to call it, you build an MCP server once.
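One place the standard helps: each tool declares a schema for its arguments, so arguments proposed by the model can be checked before anything runs. The sketch below is a deliberately minimal, hand-rolled check covering only required fields and a few basic types. The `UPDATE_CRM_SCHEMA` tool schema and the `check_arguments` helper are hypothetical; a production system would more likely rely on a full JSON Schema validator.

```python
# Hypothetical argument schema for an "Update CRM Record" tool.
UPDATE_CRM_SCHEMA = {
    "type": "object",
    "properties": {
        "record_id": {"type": "string"},
        "status": {"type": "string"},
        "amount": {"type": "number"},
    },
    "required": ["record_id", "status"],
}

# Only the JSON types this sketch understands (besides "number").
_SIMPLE_TYPES = {"string": str, "boolean": bool, "object": dict}


def _matches(value: object, json_type: str) -> bool:
    if json_type == "number":
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    return isinstance(value, _SIMPLE_TYPES[json_type])


def check_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return human-readable problems; an empty list means the call looks valid."""
    problems = []
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required field: {key}")
    for key, value in arguments.items():
        spec = schema.get("properties", {}).get(key)
        if spec is None:
            problems.append(f"unexpected field: {key}")
        elif not _matches(value, spec["type"]):
            problems.append(f"wrong type for {key}: expected {spec['type']}")
    return problems


if __name__ == "__main__":
    # Arguments as an LLM might propose them: status is missing, record_id is not a string.
    print(check_arguments(UPDATE_CRM_SCHEMA, {"record_id": 42}))
```

Because the schema travels with the tool, this check is written once on the client side instead of once per integration.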

This means faster time-to-market, easier maintenance, and a scalable way to expand your product's ecosystem. It’s time to clean out the drawer of cables. Adopt the standard, and start building the future faster.

How to Use MCP in Lamatic.ai

At Lamatic.ai, we recognize that the future of AI is interconnected, not isolated. We are embracing the MCP standard to ensure that our users aren't just building powerful AI workflows, but workflows that can actually do things in the real world with minimal friction.

In Lamatic.ai, you can use MCP to connect your generative AI flows to external systems. With Lamatic as your AI orchestration layer, you can plug in existing MCP servers or build your own custom ones, allowing your AI agents to access databases, execute code, and manage third-party tools without writing complex, bespoke integration logic for every single step.

Add an MCP Server to Your Project

Navigate to Connections > MCP/Tools in Lamatic Studio and add a new server with these configurations:

Credential Name: Descriptive name for your MCP server

Host: The endpoint URL of your MCP server

Type: Select the protocol type (http or sse)

Headers: Configure authentication and custom headers
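As a rough mental model, the four fields above can be captured in a small record and sanity-checked before saving. This is a sketch, not Lamatic's actual schema or API: the `McpServerConfig` class, its field names, the example URL, and the assumption that Host holds an http(s) URL are all illustrative.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Protocol types named in the configuration list above.
ALLOWED_TYPES = {"http", "sse"}


@dataclass
class McpServerConfig:
    """Hypothetical mirror of the connection form; not Lamatic's real schema."""
    credential_name: str
    host: str  # assumed to be the server's endpoint URL
    type: str = "http"
    headers: dict[str, str] = field(default_factory=dict)

    def problems(self) -> list[str]:
        """Return a list of configuration issues; empty means it looks usable."""
        issues = []
        if not self.credential_name.strip():
            issues.append("credential_name must not be empty")
        parsed = urlparse(self.host)
        if parsed.scheme not in {"http", "https"} or not parsed.netloc:
            issues.append("host must be an http(s) URL")
        if self.type not in ALLOWED_TYPES:
            issues.append("type must be 'http' or 'sse'")
        return issues


if __name__ == "__main__":
    config = McpServerConfig(
        credential_name="github-mcp",
        host="https://mcp.example.com/mcp",  # placeholder URL
        type="http",
        headers={"Authorization": "Bearer <your-token>"},
    )
    print(config.problems())
```

Authentication lives in the headers rather than the URL, which keeps tokens out of logs that record the host.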

Use MCP in Your Flows

MCP integrates directly with the Generate Text Node. To add it to your flow:

Select Your Flow: Choose the flow where you need external data.

Add MCP Node: Insert the node into your flow.

Configure: Select your saved MCP server.

Choose Tools: Pick the specific resources or tools the AI should use.

Handle Responses: Map the output to the next step in your flow.
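The "Choose Tools" and "Handle Responses" steps can be pictured as two small functions: one narrows the server's tool list to an allowlist, the other flattens a tool result into text for the next step. This is a sketch of the idea, not how Lamatic implements it. The helper names are hypothetical, and the result shape (a `content` list of typed blocks plus an `isError` flag) follows the MCP tool-result format.

```python
def select_tools(available: list[dict], allowed_names: set[str]) -> list[dict]:
    """Keep only the tools the flow is allowed to expose to the model."""
    return [tool for tool in available if tool["name"] in allowed_names]


def text_from_result(result: dict) -> str:
    """Join the text blocks of a tool result so the next step can consume them."""
    if result.get("isError"):
        raise RuntimeError("tool call reported an error")
    return "\n".join(
        block["text"]
        for block in result.get("content", [])
        if block.get("type") == "text"
    )


if __name__ == "__main__":
    # Made-up tool list, as a server might advertise it.
    available = [{"name": "read_issue"}, {"name": "push_code"}, {"name": "summarize_pr"}]
    print(select_tools(available, {"read_issue", "summarize_pr"}))

    # Made-up tool result.
    result = {"content": [{"type": "text", "text": "Issue 12 is open."}], "isError": False}
    print(text_from_result(result))
```

Exposing only the tools a flow needs is a simple way to limit what the model can do, for example allowing it to read issues without letting it push code.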

Explore the MCP Integration on Lamatic.ai

Ready to stop building "glue code" and start building the future? Check out our MCP Documentation to get started.

The Era of "Integration Debt" is Over

You didn't start a company to write API connectors; you started it to solve problems with AI. Every hour your team spends wrestling with bespoke "glue code" is an hour stolen from your core product.

MCP isn't just a technical protocol; it’s a declaration of independence from messy integrations. By combining the universal standard of MCP with the orchestration power of Lamatic.ai, you aren't just building faster—you’re building for a future where your AI can talk to anything, instantly. You get to stop being an "integration company" and go back to being an AI company.

Ready to plug in?

Don't be the startup still building proprietary bridges while the rest of the industry is moving toward a universal standard. Clean up your codebase, accelerate your roadmap, and let your AI finally interact with the world the way it was meant to.
