Have you ever used ChatGPT, an AI agent, or a tool with agents and thought:

“Hmm… this isn’t quite what I expected.”

You’re not alone. And honestly, it’s probably not your fault.

The output you get from AI depends not just on your prompt, but also on a handful of under-the-hood settings known as parameters.

The good news?

You don’t have to be a developer to understand or use them.

This post is your friendly, non-coder guide to LLM parameters: what they mean, and how you can use them (even in no-code tools!) to get more useful, reliable, or creative results.

🧠 What Are “Parameters” in an LLM?

Think of parameters as sliders or dials that control how the AI behaves.

They don’t change the AI model itself; they just change how it responds to you.

Imagine you’re a movie director:

You decide if the actor should be serious or funny (that’s a parameter).

Whether they give a 1-line answer or a monologue (another parameter).

Whether they improvise or stick to the script (yep, also a parameter).

A few simple tweaks can dramatically shift how helpful, creative, or clear the output is.

🎛️ The 5 AI Parameters That Actually Matter

Let’s break down the most important ones, without jargon, just real-world impact:

1. Temperature → Controls Creativity vs. Safety

Lower (0.1–0.4): Predictable, safe, to-the-point.

Higher (0.7–1): Creative, surprising, exploratory.

Use this when:

You want consistent, to-the-point outputs? Set it low. (A low temperature makes answers more repeatable, not automatically more accurate.)

You want ideas, names, or concepts? Set it higher.

Example:

“Give me 5 startup names for a mood-tracking app.”

Try it at 0.2, then at 0.9. You’ll get totally different vibes.
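Curious what the dial does under the hood? (Totally optional.) Here is a minimal sketch, assuming the AI has given raw scores to three candidate next words. The scores are made up, and `softmax_with_temperature` is a hypothetical helper name, not part of any tool. It shows the usual idea: divide the scores by the temperature, then turn them into probabilities.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    scaled = [score / temperature for score in logits]
    biggest = max(scaled)  # subtract the max so exp() stays numerically stable
    exps = [math.exp(s - biggest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next words (illustration only).
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # favorite word dominates
high = softmax_with_temperature(logits, 0.9)  # probabilities spread out

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At the low setting nearly all of the probability lands on the top-scoring word, while the higher setting gives the runners-up a real chance. That is where the extra variety comes from.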

2. Top-p (a.k.a. Nucleus Sampling) → How Wide the AI Can Roam

This one often gets confused or sloppily explained. Here’s the deal:

Top-p tells the model how broad a pool of next-word options it should consider at each step.

Top-p = 1.0: Go wild, consider every possible next word.

Top-p = 0.5: Narrow it down, only consider the most likely words that together add up to 50% of the probability.

Tip:

Top-p and temperature both affect randomness. Usually, tweak one or the other, not both at the same time. If changing temperature isn’t helping, then adjust top-p.
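For the curious, here is a rough sketch of how that pool gets picked, assuming the common "nucleus" recipe: rank the candidate words by likelihood, then keep adding them until their combined probability reaches top-p. The word list and `nucleus_filter` are hypothetical, made up for illustration.

```python
def nucleus_filter(probs, top_p):
    """Keep the most likely words until their combined probability reaches top_p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = {}
    running_total = 0.0
    for word, p in ranked:
        kept[word] = p
        running_total += p
        if running_total >= top_p:
            break
    # Rescale so the kept probabilities add up to 1 again.
    return {word: p / running_total for word, p in kept.items()}


# Made-up probabilities for the next word (illustration only).
probs = {"calm": 0.5, "mood": 0.25, "zen": 0.125, "vibe": 0.125}

narrow = nucleus_filter(probs, 0.5)  # only the single most likely word survives
middle = nucleus_filter(probs, 0.7)  # the top two words survive
wide = nucleus_filter(probs, 1.0)    # every word stays in the pool

print(narrow)
print(middle)
print(wide)
```

Notice that top-p = 0.5 does not mean "half of the words". In this toy example a single word already covers 50% of the probability, so it is the only one left in the pool.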

3. Max Tokens → How Long the Response Can Be

Think of this as a word-count limit, except the AI counts in tokens: small chunks of text, roughly three-quarters of an English word each.

Short answer? Set max tokens to 50–100.

Long-form article? 1000+.

Getting incomplete/half-baked answers? Increase this value.

(Some tools hide this, but if the reply ends abruptly, this could be why.)
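Here is a toy sketch of why a reply can stop mid-thought. It assumes the reply is produced one token at a time and simply stops at the cap. The token list and the `generate` function are hypothetical, and the labels "length" and "stop" are borrowed from the `finish_reason` values the OpenAI API reports.

```python
def generate(tokens, max_tokens):
    """Emit tokens one by one, stopping at the cap even if the reply is unfinished."""
    output = []
    for token in tokens:
        if len(output) >= max_tokens:
            return output, "length"  # cut off by the limit
        output.append(token)
    return output, "stop"  # the reply finished on its own


# A made-up reply, already split into tokens (illustration only).
full_reply = ["Drink", " water", ",", " sleep", " well", ",", " and", " stretch", "."]

text, reason = generate(full_reply, 5)
print("".join(text), "|", reason)

text_full, reason_full = generate(full_reply, 50)
print("".join(text_full), "|", reason_full)
```

With a cap of 5 the reply ends after "sleep well" and never reaches the full stop. Raising the cap lets it finish naturally.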

4. System Prompt → Set the AI’s Personality & Role

Not a slider, but just as powerful.

The system prompt tells the AI who it is and how to act.

Example system prompts:

“You are a sarcastic Gen Z friend helping someone write a tweet.”

“You are a helpful coach for busy founders, focused on productivity.”

You’ll see this in tools like Replit Agents, MindPal, or when building GPTs in ChatGPT itself.

💡 If your outputs feel bland or generic, change the system prompt. Be specific! Give the AI a role to play.
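If you ever peek behind a no-code tool, the system prompt usually travels as the first message in a list, ahead of whatever you type. Below is a minimal sketch using the role/content layout of chat-style APIs such as OpenAI’s. `build_messages` is a hypothetical helper, the user message is made up, and nothing here is actually sent to a model.

```python
def build_messages(system_prompt, user_prompt):
    """Put the system prompt first so it frames everything the user asks."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(
    "You are a helpful coach for busy founders, focused on productivity.",
    "Help me plan my Monday.",  # made-up user request
)

for message in messages:
    print(message["role"], "->", message["content"])
```

Swapping only the first string for the sarcastic Gen Z persona would change the tone of the reply while your question stays exactly the same.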

5. Presence & Frequency Penalty → Prevent Repetition

Ever have the AI keep repeating itself, like:

“You can do this. You can do this. You can do this.”

Here’s how to fix it:

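Presence penalty: Nudges the AI toward new words and topics by penalizing anything it has already mentioned, even once.

Frequency penalty: Discourages overused words and phrases; the more often something has appeared, the bigger the penalty.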

You might not see these in basic tools, but if the platform gives you “Advanced Options,” these are worth tweaking.
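For a feel of the difference between the two, here is a small sketch that follows the adjustment described in OpenAI’s documentation: each word’s score drops by the frequency penalty times the number of times it has appeared, plus the presence penalty once if it has appeared at all. Other providers may do this differently. The scores, the word list, and `apply_penalties` are hypothetical.

```python
from collections import Counter


def apply_penalties(logits, generated, presence_penalty, frequency_penalty):
    """Lower the score of words that already appeared in the reply so far."""
    counts = Counter(generated)
    adjusted = {}
    for token, score in logits.items():
        n = counts[token]
        seen = 1 if n > 0 else 0
        # Frequency penalty grows with each repeat; presence penalty applies once.
        adjusted[token] = score - frequency_penalty * n - presence_penalty * seen
    return adjusted


# Made-up scores and reply-so-far (illustration only).
logits = {"can": 2.0, "do": 2.0, "try": 2.0}
generated = ["can", "do", "can", "can"]

adjusted = apply_penalties(
    logits, generated, presence_penalty=0.5, frequency_penalty=0.5
)
print(adjusted)
```

The word that was used three times takes the biggest hit, the word used once takes a smaller one, and the unused word is untouched, so it becomes the most likely pick.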

🛠 Where Can You Use These (No Coding Required)?

You don’t need to write code or use the OpenAI API to play with parameters.

Try them in:

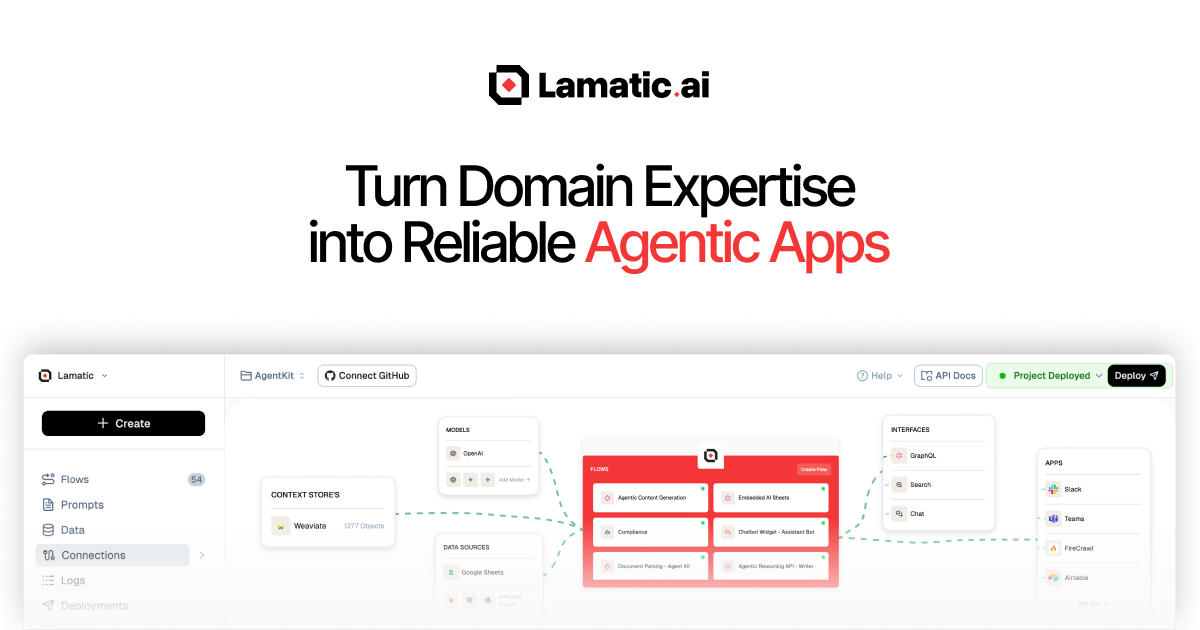

Lamatic Studio / OpenAI Playground: Easy web UIs with sliders for each parameter.

Lamatic Model Configuration

Many AI tools: Look for “Advanced” or “Custom” options.

OpenAI Prompt Playground

✨ Real-World Use Cases: When Should You Tweak What?

Naming a product?

Increase temperature for original, surprising ideas.

Writing tweets or brand content?

Add a bold, funny, or on-brand system prompt.

Extracting structured data (emails, tags, etc.)?

Lower the temperature and set a smaller max tokens value for clean, reliable data.

AI keeps rambling or repeating?

Set a max token limit and increase the frequency penalty.
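If you like keeping notes, the use cases above can be written down as a small set of presets. This is only a sketch: every number is an illustrative assumption, not a recommendation from any tool, and `DEFAULTS`, `PRESETS`, and `settings_for` are hypothetical names.

```python
# Hypothetical starting values; real tools ship their own defaults.
DEFAULTS = {
    "temperature": 0.7,
    "top_p": 1.0,
    "max_tokens": 500,
    "presence_penalty": 0.0,
    "frequency_penalty": 0.0,
    "system_prompt": "You are a helpful assistant.",
}

# Illustrative overrides for each use case (the numbers are assumptions).
PRESETS = {
    "naming": {"temperature": 0.9},
    "brand_content": {
        "system_prompt": "You are a sarcastic Gen Z friend helping someone write a tweet."
    },
    "data_extraction": {"temperature": 0.2, "max_tokens": 100},
    "stop_rambling": {"max_tokens": 100, "frequency_penalty": 0.5},
}


def settings_for(task):
    """Start from the defaults, then apply the overrides for one task."""
    return {**DEFAULTS, **PRESETS[task]}


print(settings_for("data_extraction"))
```

Each preset changes only one or two dials and leaves the rest at their defaults, which makes it easier to tell which tweak actually helped.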

💬 Don’t Learn to Code—Learn to Steer

Once you understand these 5–6 settings, you stop feeling like:

“Ugh, ChatGPT is random and unreliable.”

And you start saying:

“Okay cool, I know how to tune this!”

AI isn’t magic. It gets a lot more predictable once you know how to steer it.

🧪 TL;DR Parameter Cheat Sheet

| Parameter | What it Controls | Quick Tip |
|---|---|---|
| Temperature | How creative/unpredictable output is | Lower for facts, higher for ideas |
| Top-p | Range of outputs sampled | Lower for focus, 1.0 for full variety |
| Max Tokens | Response length | Short for summaries, long for articles |
| System Prompt | AI’s tone and “character” | “Be a Gen Z productivity coach”, etc. |
| Presence Penalty | Explores new ideas | Higher for more novelty |
| Frequency Penalty | Reduces repeated words/phrases | Use if it keeps repeating itself |

🧠 Final Thought: Prompting Isn’t Just Typing

Prompting isn’t just what you type; it’s how you configure the experience.

You don’t need an engineering degree.

You just need curiosity, and a willingness to tweak a few settings.

Next time a response feels off: don’t just rewrite the prompt, check the parameters, too. You’re closer to great AI output than you think.