Every technical team building AI agents faces the same question: Do we wire up APIs directly, adopt an open-source framework, or use a managed platform?

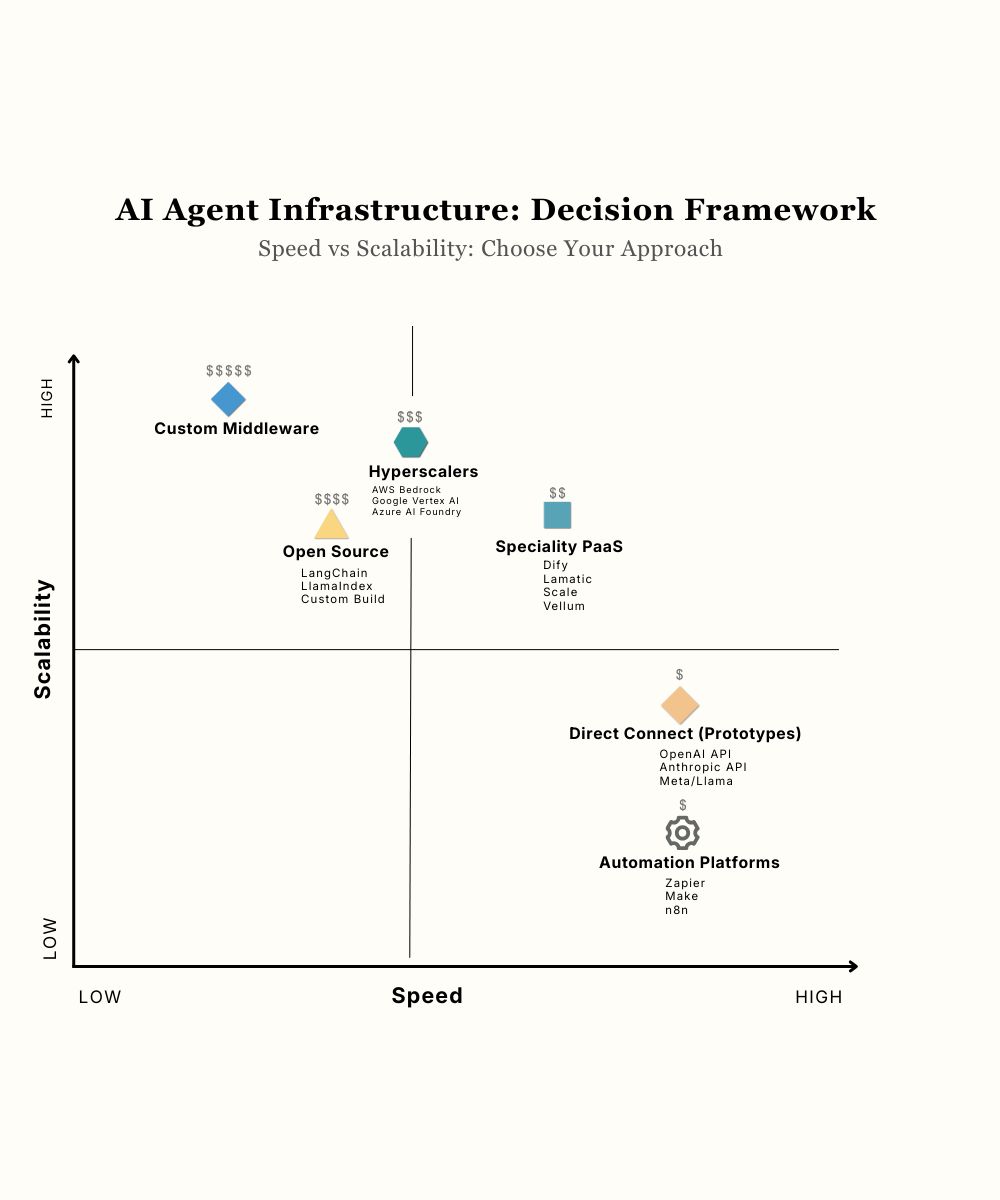

The answer depends on where you are - and where you're going. Here's a decision framework that maps five distinct architectural approaches across two dimensions: how fast you can ship (Speed) and how far you can go (Scalability).

The 2×2 Framework

X-Axis: Speed - How quickly you move from roadmap to production

Y-Axis: Scalability - How well your architecture adapts as requirements change

Both dimensions are desirable - there is no such thing as being too fast to market or too scalable for future (and perhaps unanticipated) needs. The challenge is understanding the trade-offs as they relate to your use case, so you know which approach best fits your situation.

Figure: AI Infrastructure Landscape. Solutions are positioned according to their speed to market (X-axis) and scalability (Y-axis) to illustrate the trade-off between speed and architectural extensibility.

Five Approaches to AI Infrastructure

0. Automation Platforms (No-Code/Low-Code)

Numbered "0" because while these tools are valuable for workflow automation, they aren't typically an option for teams building production AI applications.

Where: Lower-Right (Same Speed as Direct Connect, Low Scalability)

Zapier, Make, n8n - visual builders for creating triggered AI automations. They are ideal for operations teams doing workflow automation and useful for prototyping agentic logic, but not recommended for building interactive or production-grade applications.

1. Direct Connect (Foundation Model APIs)

Where: Lower-Right (prototypes) → Upper-Left (custom middleware)

Wire directly to foundation model APIs from vendors such as OpenAI, Anthropic, and Meta. It's fast for prototypes (hours to demo), but production reality hits hard: you need retry logic, cost tracking, fallback providers, rate limiting, and more.
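To give a sense of what that production work involves, here is a minimal sketch of two of those concerns: retry logic and fallback providers. It is an illustration under stated assumptions, not a reference implementation. `ProviderError`, `call_with_fallback`, and the two stub providers are hypothetical names invented for this example; in a real system each provider callable would wrap a vendor SDK call.

```python
import random
import time
from typing import Callable, Optional, Sequence, Tuple


class ProviderError(Exception):
    """Hypothetical error a provider callable raises when a request fails."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
    max_retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[str, str]:
    """Try each provider in order, retrying failures with exponential backoff.

    Returns (provider_name, response). Raises ProviderError if every
    provider fails on every attempt.
    """
    last_error: Optional[Exception] = None
    for name, call in providers:
        for attempt in range(max_retries):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                last_error = exc
                # Back off (with a little jitter) before the next attempt
                # or before moving on to the next provider.
                sleep(base_delay * (2 ** attempt) + random.uniform(0, 0.1))
    raise ProviderError(f"all providers failed: {last_error}")


# Stub providers standing in for real vendor SDK calls (assumptions).
def flaky_primary(prompt: str) -> str:
    raise ProviderError("rate limited")


def stable_backup(prompt: str) -> str:
    return f"echo: {prompt}"
```

Calling `call_with_fallback("hi", [("primary", flaky_primary), ("backup", stable_backup)])` exhausts the retries on the primary and then returns the backup's response. Cost tracking and rate limiting would be layered on top of this, which is where the maintenance effort starts to add up.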

Direct Connect and Automation Platforms are positioned at the same speed (both fastest to start), but they serve fundamentally different use cases: Direct Connect is for building custom applications, while Automation Platforms are for no-code workflow bridges.

If you go all-in on custom middleware, you get maximum scalability but pay the price: 3-6 months of specialized engineering and $50K-100K to build it, plus 40-60 hours per month in admin and ongoing maintenance.

When Direct Connect makes sense: Quick prototypes and MVPs, or large enterprises with specialized use cases that can't be supported without custom infrastructure.

2. Specialty PaaS for AI Agents (Independent Platforms)

Where: Center-Right

Dify, Lamatic, Scale, Vellum - platforms that deliver AI infrastructure as a service. They offer the connectivity, orchestration, collaboration tools and execution infrastructure as a managed service so engineering can focus on the differentiating features of their application.

The trade-off: PaaS optimizes for speed and developer experience rather than maximum scalability. For agile product teams focused on iteration speed, this is the right call - you get most of the scalability without the drag of building and maintaining complex infrastructure.

Time to production: 1-4 weeks vs. 12-16 weeks for custom approaches.

Real example: Beehive Climate needed to introduce AI "very quickly... within a sprint."

"We wanted to build Beehive, not spend months building middleware - middleware that we'd then need to maintain."

Result: The first version of any new feature is built in "probably a week or two."

When a Specialty PaaS makes sense: Small-to-mid-sized, product-focused teams that need production infrastructure immediately.

3. Open Source Frameworks (Custom Build)

Where: Center-Left

LangChain and LlamaIndex provide a middle ground between building fully custom middleware and using managed platforms. These frameworks offer flexibility through customization, but come with dependencies, breaking changes, and maintenance burden.

Teams typically spend 40-60 hours per month on admin and maintenance. Production-ready implementation: 12-16 weeks and ~$50K in engineering costs.

Real example: Reveal Automation started with LangChain but migrated to Lamatic's managed platform to eliminate platform admin and accelerate their release cycle.

"The time and effort required to test new workflows in LangChain was huge."

Result: After testing Lamatic with their toughest use case, they fully replatformed:

"Moving from using 100 percent LangChain to using 100 percent Lamatic was an afternoon project … now we're releasing new AI features about 10 times faster."

When an Open Source Framework makes sense: Teams with ML/AI expertise where flexibility is more important than speed and where the particular open source framework's abstractions are a good match for their specific use case.

4. Hyperscaler Platforms (Cloud Provider AI)

Where: Upper-Center

AWS Bedrock, Google Vertex AI, Azure AI Foundry - enterprise-grade scale with cloud-native integrations. Faster than open source but still takes weeks to set up.

The trade-off: strong ecosystem integration vs. vendor lock-in. High switching costs usually translate into higher operational costs.

When a Hyperscaler Platform makes sense: You are already deeply invested in AWS, Azure, or GCP, with compliance tied to that specific cloud.

Quick Decision Guide

Your timeline:

Prototype this week → Direct Connect / Automation Platform

Production in 2-4 weeks → Specialty PaaS / Hyperscaler

3-6 months to build → Open Source / Custom (if you have custom needs and a capable team)

Your team:

1-5 engineers, product-focused → Specialty PaaS / Hyperscaler

10+ engineers with infra team → Open Source / Custom

Non-technical team → Automation Platform

Your requirements:

Testing multiple models, fast iteration → Specialty PaaS

Infrastructure IS your product → Custom Build

Enterprise has a long-term commitment to a cloud provider → Hyperscaler

Time-to-Market Comparison

| Approach | Time to Market | Rationale for Choosing |
| --- | --- | --- |
| Direct Connect | 1-2 weeks | Fastest way to prototype |
| Specialty PaaS | 1-4 weeks | Fastest path to production |
| Hyperscaler | 6-10 weeks | Compliance considerations |
| Open Source | 10-20 weeks | Unique requirements (faster than full custom) |
| Full Custom | 12-24 weeks | Unique requirements (unsupported above) |
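If you want to apply the comparison above to a concrete deadline, a small lookup can help. The sketch below copies the week ranges straight from the table; the `Approach` type and the `fits_deadline` helper are hypothetical names introduced only for illustration, and treating the upper end of each range as the worst case is an assumption.

```python
from typing import List, NamedTuple


class Approach(NamedTuple):
    name: str
    min_weeks: int
    max_weeks: int
    rationale: str


# Week ranges and rationales copied from the comparison table.
APPROACHES = [
    Approach("Direct Connect", 1, 2, "Fastest way to prototype"),
    Approach("Specialty PaaS", 1, 4, "Fastest path to production"),
    Approach("Hyperscaler", 6, 10, "Compliance considerations"),
    Approach("Open Source", 10, 20, "Unique requirements (faster than full custom)"),
    Approach("Full Custom", 12, 24, "Unique requirements (unsupported above)"),
]


def fits_deadline(deadline_weeks: int, worst_case: bool = True) -> List[str]:
    """Return the approaches whose time to market fits within the deadline.

    With worst_case=True the upper end of each range must fit;
    with worst_case=False only the lower (best-case) end must fit.
    """
    result = []
    for approach in APPROACHES:
        bound = approach.max_weeks if worst_case else approach.min_weeks
        if bound <= deadline_weeks:
            result.append(approach.name)
    return result
```

For example, `fits_deadline(4)` returns only Direct Connect and Specialty PaaS, while `fits_deadline(10, worst_case=False)` also admits Hyperscaler and Open Source on their best-case estimates.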

The Bottom Line

There is no universal "right answer" - it's about understanding the trade-offs and how they apply to your situation.

Ask yourself: Are you optimizing for speed, control, scale, flexibility, focus, or something else?

Your job isn't to build the most sophisticated stack. It's to make sure your infrastructure keeps up with your ambition without stealing your focus.

The best infrastructure is the kind you forget about - the kind that gets out of your way so you can focus on what actually matters: solving real problems for real users.

For most teams, time-to-market is a key consideration. Be sure to validate your rationale before choosing an approach that is slower to market.