TL;DR — Diagnostic framework, not a prescription

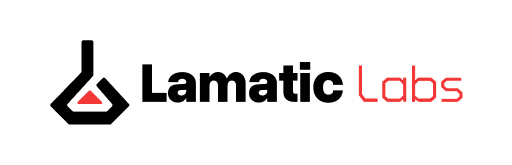

Direct API: Fast start; ceiling emerges as requirements evolve

Custom Middleware: Control and customization, at the cost of ongoing maintenance

Managed Platform: Predictable operations; trade control for velocity

Decide by team structure, scale trajectory, compliance needs, and plumbing vs product focus

The Framework at a Glance

The Three Paths:

Direct API Integration — Minimal wiring for maximum simplicity

Custom Middleware — In-house abstraction layers

Managed Middleware — Adopt existing platform solutions

This framework summarizes observed patterns across production deployments to clarify options and typical outcomes for teams building AI-powered products (Lamatic analysis, 2024–2025).

Where Does This Framework Come From?

Lamatic's founding story mirrors a common industry arc: teams start by integrating directly with LLMs, then build middleware as flows become more complex (chaining steps, handling exceptions, and meeting higher reliability demands). Over time, this middleware absorbs engineering effort that could go to customer features, becoming a product in its own right. This “infrastructure tax” emerges not just with rising usage but as the complexity and mission-criticality of AI flows increase, a theme echoed in interviews with dozens of experienced teams.

Research into how teams navigate AI infrastructure reveals three distinct approaches, each with predictable characteristics and evolution patterns.

What Is AI Middleware?

AI middleware is the infrastructure layer between an application and the resources it requires. It handles the routing, reliability, observability, cost-control, compliance, and ongoing optimization demands that emerge as applications mature.

Operational responsibilities include request routing/model selection, failover/fallbacks, retries/backoff, rate limiting, and connection management (provider production guides).
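To make the retries/backoff item concrete, here is a minimal sketch of exponential backoff with jitter. It is not taken from any provider's guide: `TransientError`, `backoff_delays`, and `call_with_retries` are hypothetical names, and the base delay, cap, and retry count are assumed defaults chosen only for illustration.

```python
import random
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (rate limits, timeouts)."""


def backoff_delays(retries: int, base: float = 0.5, cap: float = 8.0) -> List[float]:
    """Un-jittered schedule: base * 2**attempt, capped at `cap` seconds."""
    return [min(cap, base * (2 ** attempt)) for attempt in range(retries)]


def call_with_retries(
    fn: Callable[[], T],
    retries: int = 3,
    base: float = 0.5,
    cap: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn; on TransientError retry up to `retries` times, then re-raise."""
    delays = backoff_delays(retries, base, cap)
    for attempt in range(retries + 1):
        try:
            return fn()
        except TransientError:
            if attempt == retries:
                raise  # retries exhausted: surface the last error
            # Full jitter: wait a random amount up to the scheduled delay
            # so that many clients do not retry in lockstep.
            sleep(random.uniform(0, delays[attempt]))
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the schedule can be exercised in tests without real waiting. A production layer would typically also distinguish retryable from non-retryable errors per provider.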

Business responsibilities include cost tracking, usage analytics, multi-tenant isolation, budget controls, compliance audit trails (SOC2 requirements are extensive), and collaboration features such as role-based permissions and automated workflows that reduce error-prone handoffs and manual work.
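As a rough illustration of cost tracking combined with per-tenant budget controls, the sketch below keeps a running spend per tenant and refuses a charge that would pass the tenant's limit. `BudgetTracker`, `BudgetExceeded`, and the per-1k-token prices are assumptions made for this example, not real provider pricing or a Lamatic API.

```python
from collections import defaultdict
from dataclasses import dataclass, field


class BudgetExceeded(Exception):
    """Raised when a charge would push a tenant past its configured limit."""


@dataclass
class BudgetTracker:
    # Per-tenant spending limits in USD; tenants without an entry are unlimited.
    limits_usd: dict
    # Running spend per tenant, kept separately so tenants stay isolated.
    spent_usd: dict = field(default_factory=lambda: defaultdict(float))

    def charge(
        self,
        tenant: str,
        input_tokens: int,
        output_tokens: int,
        usd_per_1k_input: float,
        usd_per_1k_output: float,
    ) -> float:
        """Compute the cost of one request and add it to the tenant's spend."""
        cost = (input_tokens / 1000) * usd_per_1k_input
        cost += (output_tokens / 1000) * usd_per_1k_output
        limit = self.limits_usd.get(tenant)
        if limit is not None and self.spent_usd[tenant] + cost > limit:
            raise BudgetExceeded(f"{tenant} would exceed its {limit:.2f} USD limit")
        self.spent_usd[tenant] += cost
        return cost
```

In practice the spend would live in a shared store rather than process memory, and a team might prefer to warn at a threshold before hard-blocking requests.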

Technical responsibilities include caching strategies, context management, embeddings and vector operations, prompt/version testing, and continuous testing/evaluation of quality, cost, and speed to support rapid optimization.
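One small piece of a caching strategy is deciding what counts as "the same request". The sketch below is one possible approach, assuming exact-match caching: it hashes a canonical JSON form of the model, prompt, and parameters, and prefixes the tenant ID so cached responses are never shared across tenants. The function name `make_cache_key` and its parameters are hypothetical.

```python
import hashlib
import json


def make_cache_key(tenant_id: str, model: str, prompt: str, params: dict) -> str:
    """Build a deterministic, tenant-scoped key for an exact-match response cache."""
    # sort_keys plus fixed separators gives one canonical serialization,
    # so {"a": 1, "b": 2} and {"b": 2, "a": 1} produce the same key.
    payload = json.dumps(
        {"model": model, "prompt": prompt.strip(), "params": params},
        sort_keys=True,
        separators=(",", ":"),
    )
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return f"{tenant_id}:{digest}"
```

Including sampling parameters such as temperature in the key is a design choice: it avoids returning a response generated under different settings, at the cost of a lower hit rate.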

Why Do AI Systems Require Different Infrastructure?

Compounding Complexity

Single requests touch many steps (validation, context retrieval, embedding generation, model calls, fallbacks, parsing, caching), compounding system complexity (GitHub discussions document common patterns).

Production Orchestration Example

    # Orchestrated request with rate limits, cache, audit, fallbacks (illustrative)
    # rate_limiter, call_provider, AuditLogger, cache_key, cache, providers and the
    # exception types are assumed to be defined elsewhere in the application.
    async def handle_ai_request(prompt, user_ctx):
        # If the primary provider's rate limit is exhausted, go straight to a secondary.
        if not await rate_limiter.check("openai", user_ctx.id):
            return await call_provider("anthropic", prompt, user_ctx)

        audit = AuditLogger(user_ctx)
        key = cache_key(prompt, user_ctx)

        # Serve from cache when possible.
        if cached := await cache.get(key):
            audit.log("cache_hit")
            return cached

        # Try providers in priority order, falling back on rate limits and timeouts.
        for provider in ["openai", "anthropic", "cohere"]:
            try:
                resp = await providers[provider].complete(
                    prompt, user_ctx, timeout=30
                )
                audit.log("success", provider)
                await cache.set(key, resp)
                return resp
            except (RateLimit, Timeout) as e:
                audit.log("fallback", provider, str(e))

        raise ServiceUnavailable("all_providers_failed")

The snippet above is a small example of the type of plumbing that bloats code bases and draws focus away from the product itself.

Path Analysis: Detailed Examination

Path 1 — Direct API Integration

Optimizing for discovery and initial velocity

Characteristics: Minimal integration, direct dependencies, limited abstractions, fast deployment.

Typical evolution (patterns across implementations):

Initial period: Rapid prototyping

Growth period: Begin adding platform features to the product roadmap

Scaling period: Patch solutions accumulate

Decision period: Architecture choice becomes necessary

Common conditions: Works when usage and business requirements are stable, a single provider suffices, some downtime is tolerable, and compliance requirements are minimal. Teams may begin building custom middleware in response to production issues without first considering their strategic options.

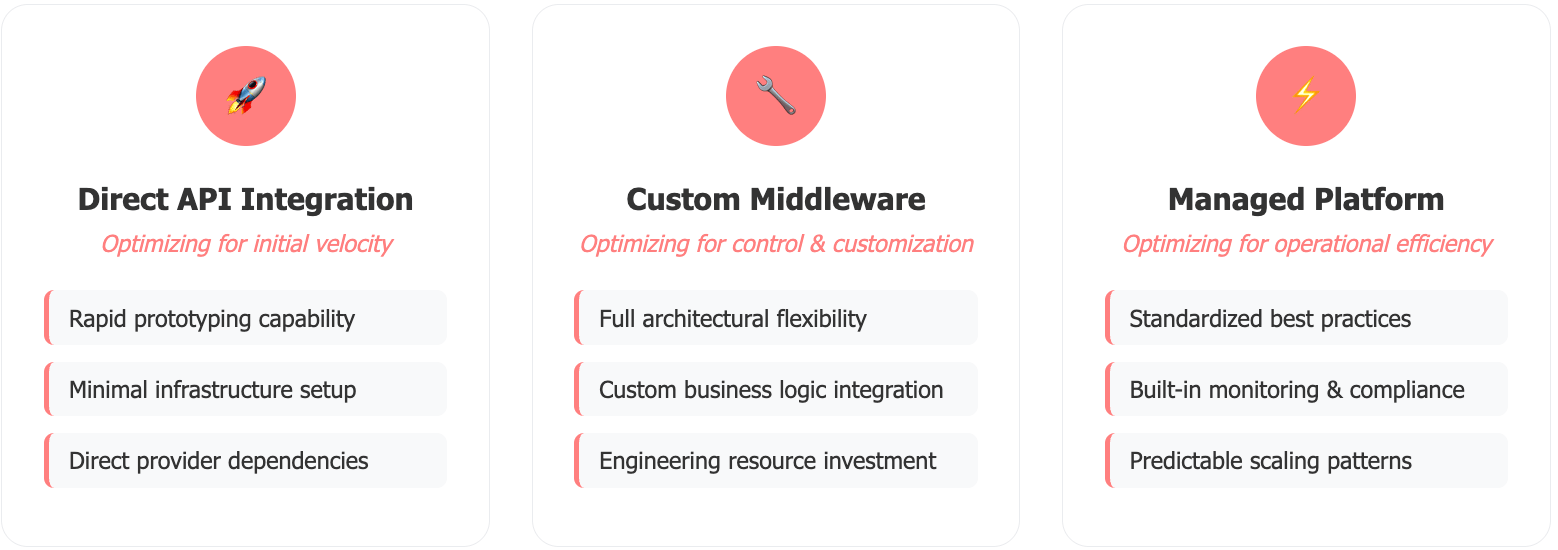

Path 2 — Custom Middleware (DIY)

Optimizing for unique infrastructure requirements and control

"Our middleware started simple, but grew complex; eventually, it was more complex than our product." (Reveal case study, one team's experience.)

Scope vs Investment

Source note: Patterns from Lamatic engagements; experiences vary.

"Each client needed similar infrastructure. We were rebuilding the same solutions repeatedly." (Raza Noorani testimony, one software development agency’s experience.)

Path 3 — Managed Middleware

Optimizing for operational efficiency

"For us, DIY meant months on SOC2 and maintenance; using a managed platform accelerated timelines and allowed us to focus on features." (Beehive interview, one team's experience.)

Implementation traits: Configuration over construction, standardized patterns, vendor relationship management, predictable operations.

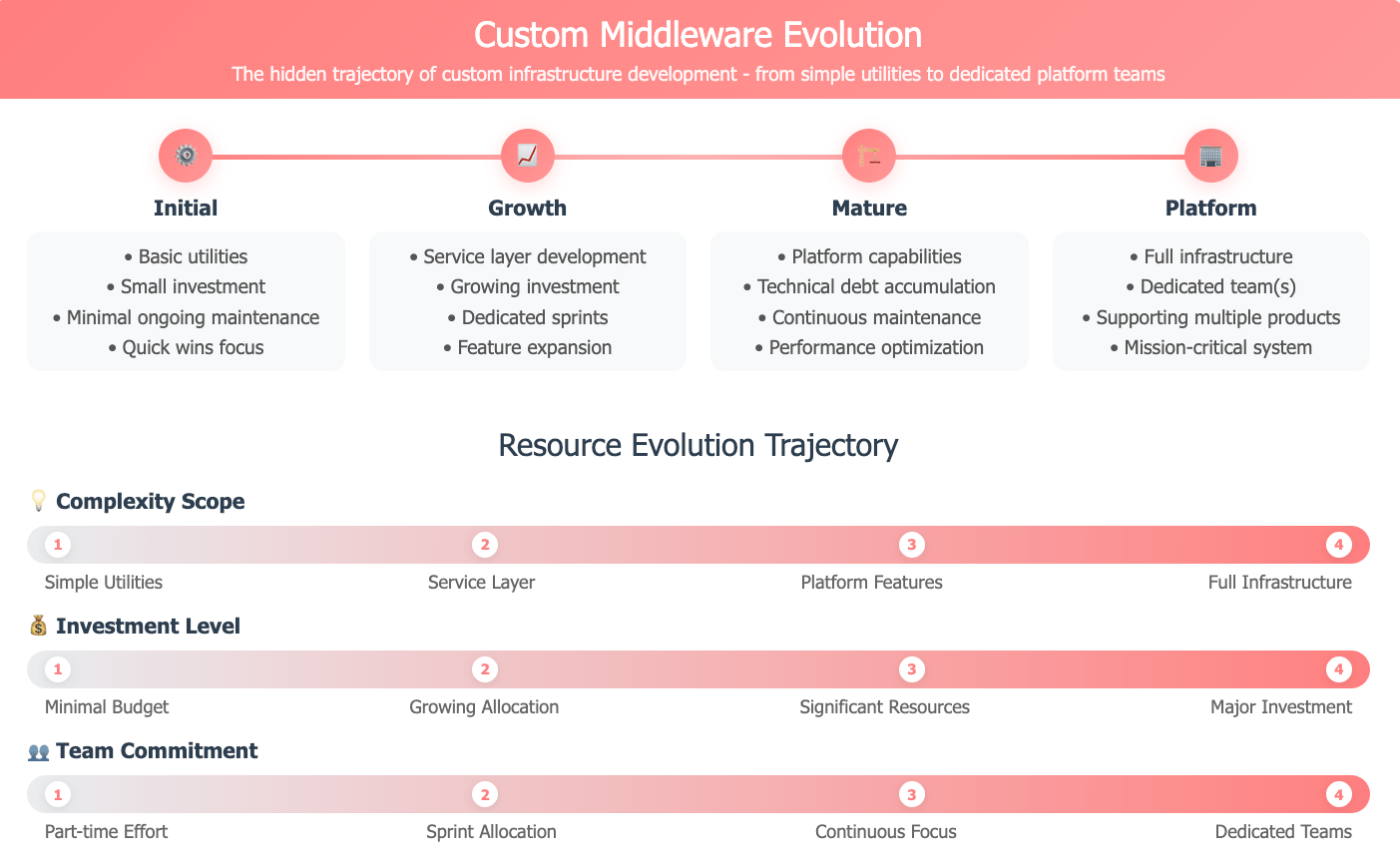

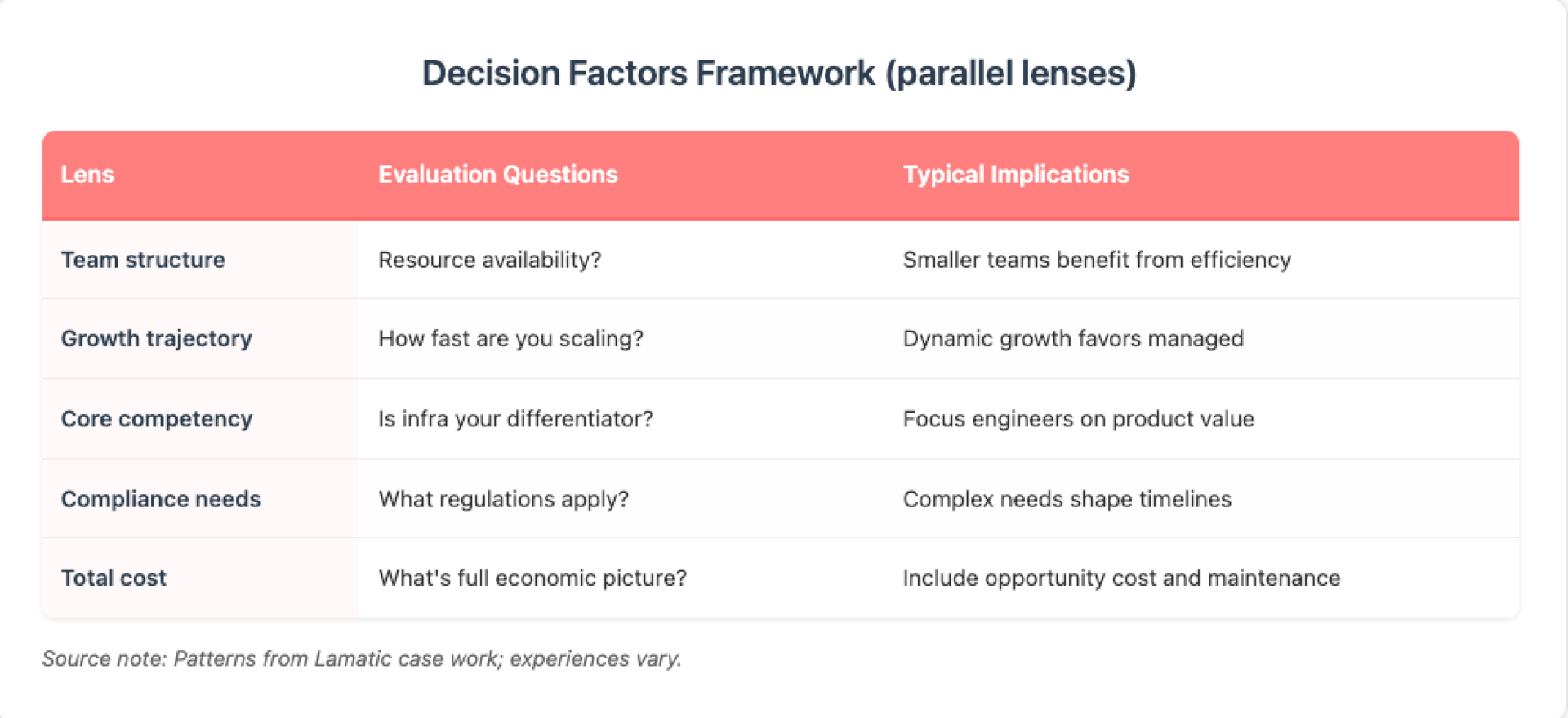

How Do Teams Evaluate Their Options?

Scale Considerations Matrix

Source note: Lamatic observations + public best-practice docs.

Decision Factors Framework (parallel lenses)

Source note: Patterns from Lamatic case work; experiences vary.

When Does Each Path Typically Fit?

Direct API Integration fits when:

Building prototypes and POCs

Creating internal tools with known patterns

Exploring early product-market fit

Running cost-sensitive experiments

Custom Middleware fits when:

Infrastructure provides competitive moat

Unique technical requirements exist

Complete control requirements dominate

R&D and experimentation drive value

Managed Platform fits when:

Product differentiation takes priority

Rapid scaling requirements exist

Compliance deadlines approach

Engineering resources are constrained

What Hidden Costs Emerge?

Each path carries its own infrastructure tax:

Direct Integration considerations: Timeouts and exceptions reach end users, evolving requirements may become unsupportable, and observability and cost control are limited.

Custom Middleware considerations: Infrastructure sprints displacing product feature work, continuous maintenance burden, slowing release velocity, infrastructure hiring needs, effective lock-in due to engineering opportunity cost.

Managed Platform considerations: Vendor relationship management, platform learning investment, customization limitations, subscription costs.

See provider production guidance and Lamatic case studies for detailed patterns.

How Do Teams Navigate Path Changes?

Teams that migrate typically run in parallel, shift traffic gradually, and monitor reliability and costs throughout. Exact timelines vary by context.
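The "shift traffic gradually" step is often implemented with deterministic bucketing, so a given user stays on the same path while the rollout percentage rises. The following is a minimal sketch under that assumption; `bucket`, `route`, and the `"legacy"`/`"new"` labels are hypothetical names, not part of any referenced migration tooling.

```python
import hashlib


def bucket(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def route(user_id: str, rollout_percent: int) -> str:
    """Send the user to the new path if their bucket falls under the rollout."""
    return "new" if bucket(user_id) < rollout_percent else "legacy"
```

Because the bucket depends only on the user ID, raising `rollout_percent` from 10 to 50 keeps every user who was already on the new path there, which makes reliability and cost comparisons between the two paths easier to interpret. Rolling back is a matter of lowering the percentage.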

The process is achievable but requires planning. For examples, checklists and live support, access the migration resources referenced in our documentation.

Frequently Asked Questions

How do teams know when to change paths?

Triggers often include reaching operational limits, compliance requirements emerging, or engineering resources becoming constrained. The decision often crystallizes around specific incidents.

What about lock-in considerations?

Custom middleware creates internal technical debt; a managed platform adds vendor relationships. Both are forms of dependency. Choose the constraint that aligns with your priorities and risk tolerance.

How do compliance timelines typically differ?

Custom middleware requires building the compliance layer from scratch; platforms with existing certifications can significantly shorten the timeline (provider guides; Beehive interview).

Is changing paths mid-stream realistic?

Yes—many teams successfully migrate. Clear objectives, realistic timelines, and staged cutovers matter most.

Should "unique" requirements automatically mean Custom Middleware?

First validate whether uniqueness lies in infrastructure needs or product differentiation. Many "unique" infrastructure requirements prove common in practice.

About This Framework

Developed by Chuck Whiteman (Lamatic CEO) with technical contributions from Aman Sharma (CTO). Based on analysis of production deployments, interviews including Reveal and Beehive, and community reports (2024–2025).

Last Updated: September 2025
