⛳︎ In this Edition

📹 Exclusive Webinar on LLMs this Friday

🏖️ AI's "Sand Castle Problem"

This week I got unsolicited calls from 3 different tech CEOs. Now I get a lot of cold calls, but normally they’re from someone trying to sell me, not from a CEO hoping to buy. What’s most surprising about these calls is that we don’t even think of the CEO as our target customer. We target CTOs. After all, the CTO owns the problem we solve as well as the tech stack where our solution lives.

Yet here I am - fielding unsolicited inbound calls from CEOs who are all interested in solving the Exact. Same. Problem. This week, I have follow-up calls with all 3 of these CEOs. And each is bringing the CTO.

What is this problem that’s so acute that CEOs are dragging CTOs to meetings to discuss the tech stack?

I’ll call it the “Sand Castle Problem” 🏖️ because two of these CEOs (and others I’ve spoken with) independently told me they feel like the LLM-powered applications they’re running are built on “shifting sand” and lack a stable foundation.

Newton’s First Law of Motion tells us that a body in motion will remain in motion unless it is acted upon by a force.

A corollary that many have adopted is that a computer system that works will continue working unless you change something.

Unfortunately, this corollary doesn’t hold true for systems built on top of LLMs, which are inherently non-deterministic and dynamic.

This is the reason CEOs are suddenly taking an interest. The traditional method for shielding customers from computer problems (i.e., testing changes before releasing them to Production) doesn’t work with these systems. Not only do problems reveal themselves with real customers, they happen at any time - rather than on your standard release cycle when all hands are ready and waiting to put out any fires.

How are GenAI systems different?

LLMs are non-deterministic and constantly evolving - as described above, we’re all accustomed to “Newtonian” computer systems. With GenAI systems, things can break even when you change nothing.

The half-life of GenAI techniques & technology is very short - this means that GenAI applications are constantly being refactored to remain competitive, which causes the traditional sort of “Newtonian” breakage.

Data = Fuel (its quality is crucial) - without high-quality data, GenAI applications will simply hallucinate and serve up answers that sound right, but aren’t. Integrating these systems with a steady flow of properly structured, high-quality data is an ongoing and evolving task.

LLMs are not equally good at all tasks - and applications often perform a variety of tasks. When you integrate multiple models into a single application, the complexity of the stack and the potential for variability compound.

Experienced talent is scarce, expensive and difficult to retain - as if the work above weren’t enough, it’s also a lot of work to find the people that can do it.

Problems with LLM apps among others

How to solve the “Sand Castle Problem”?

Output Monitoring - what distinguishes Generative AI from really good search is its ability to “create”. This is the positive side of the “non-deterministic coin”. When it comes to creating new things, today’s GenAI is far from perfect. Systematically identifying these imperfections and correcting them is the key to optimizing both current and future output quality.

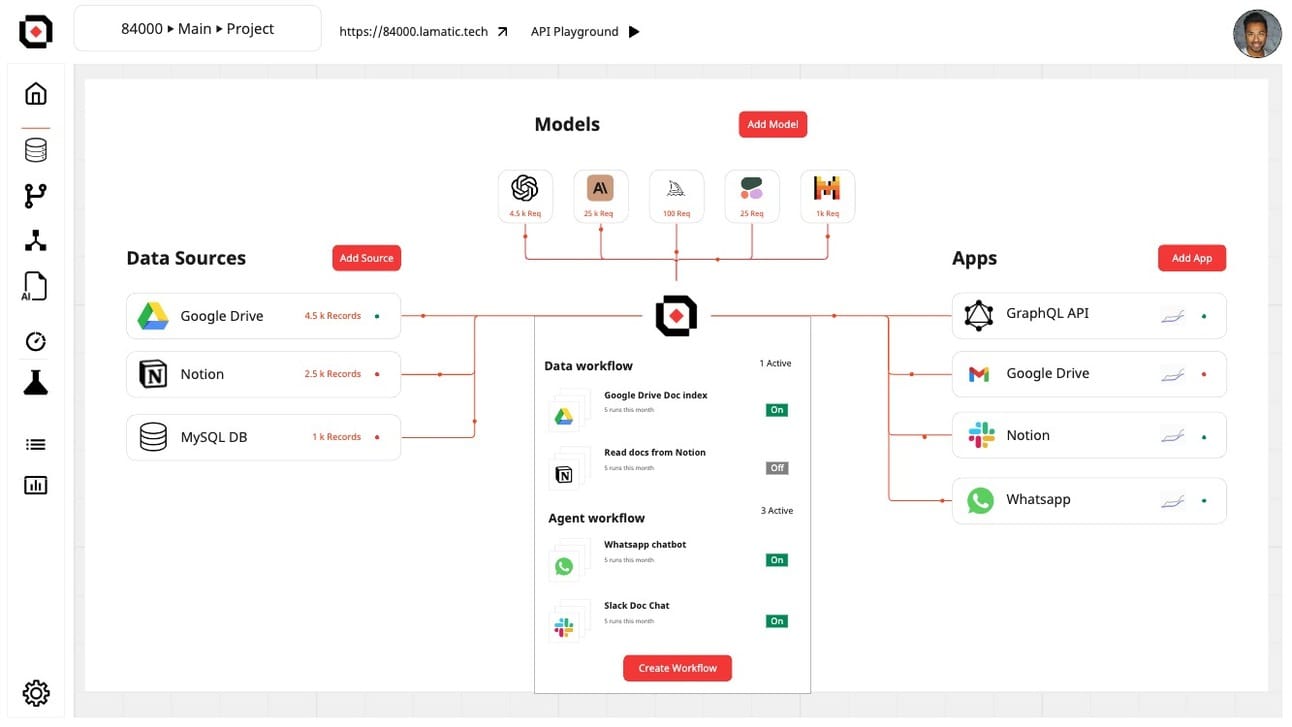

Modularity - there are a lot of components in any GenAI application. A modular framework that allows you to snap in new components as the old ones become obsolete is an important way to limit tech debt while ensuring that your application remains useful.

Input Monitoring - keep track of what your users are doing with the system to ensure both its safety and its integrity. Make sure there are sufficient guardrails in place to handle misuse when it happens.

Continual Experimentation - last year 3 new public LLMs were released each month on average. This pace is not slowing, which increases the importance of monitoring developments and snapping in new technologies when warranted.

Managed Platform - the complexity and pace of advancement within this new tech stack layer are only increasing. I believe it will be increasingly rare for building and maintaining a bespoke GenAI platform to be both a core competence and a competitive differentiator for a company.
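
For readers who think in code, here is a rough sketch of how modularity, input monitoring and output monitoring might fit together. Everything in it is an assumption made up for illustration: `TextModel`, `EchoModel`, `Pipeline`, `blocklist` and `max_length` are hypothetical names, and `EchoModel` is a stand-in for a real LLM client so the example runs offline. Real guardrails would be far more thorough than a phrase list and a length limit.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional, Protocol


class TextModel(Protocol):
    """Hypothetical interface: anything with generate() can be snapped in."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for a real LLM client (assumption, not a real API)."""

    def generate(self, prompt: str) -> str:
        return f"Echo: {prompt}"


# A check returns a description of the problem, or None if the text passes.
Check = Callable[[str], Optional[str]]


def blocklist(*terms: str) -> Check:
    """Flag text containing any of the given phrases (case-insensitive)."""

    def check(text: str) -> Optional[str]:
        lowered = text.lower()
        hits = [t for t in terms if t.lower() in lowered]
        return f"contains blocked terms: {hits}" if hits else None

    return check


def max_length(limit: int) -> Check:
    """Flag text longer than `limit` characters."""

    def check(text: str) -> Optional[str]:
        return f"longer than {limit} characters" if len(text) > limit else None

    return check


@dataclass
class Pipeline:
    model: TextModel
    input_checks: list = field(default_factory=list)
    output_checks: list = field(default_factory=list)
    log: list = field(default_factory=list)

    def run(self, prompt: str) -> Optional[str]:
        # Input monitoring: reject the prompt before it reaches the model.
        for check in self.input_checks:
            problem = check(prompt)
            if problem:
                self.log.append(f"input rejected: {problem}")
                return None
        answer = self.model.generate(prompt)
        # Output monitoring: record problems so they can be reviewed later.
        for check in self.output_checks:
            problem = check(answer)
            if problem:
                self.log.append(f"output flagged: {problem}")
        return answer


pipeline = Pipeline(
    model=EchoModel(),
    input_checks=[blocklist("ignore previous instructions")],
    output_checks=[max_length(40)],
)
print(pipeline.run("Summarize this week's edition"))
print(pipeline.log)
```

Because `Pipeline` only depends on the `generate()` method, trying out a newly released model means assigning a different object to `pipeline.model`; the checks and the log stay the same, which is the point of keeping the pieces modular.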