2025 was a watershed year for AI. It was the moment when AI transitioned from “impressive demos” to something far more consequential: embedded infrastructure, revenue-critical workflows, and genuine business dependency. The headlines screamed of breakthrough models like OpenAI’s GPT-5, Google’s Gemini 3, and Meta’s Llama 4 (400B to 2T parameters), but beneath these model announcements lay a quieter, more important story. Teams stopped asking “What can AI do?” and started asking “How do we reliably build with it?”

But this turning point comes with a warning. Alongside real progress, the AI boom has also created heavy speculation. Many companies are valued far beyond what their fundamentals justify, and much of the market is filled with shallow products built on the same underlying technology. Venture capital investment in AI has surged to extreme levels, reaching somewhere between $192 billion and over $200 billion by late 2025, even though the number of deals has dropped sharply. This is a familiar pattern: strong, real innovation at the center, surrounded by a growing and unsustainable layer of hype.

As 2025 closes and organizations gear up for 2026, the year ahead will test something more important than model IQ:

It will show which companies can truly build reliable AI systems that scale and keep improving over time. In that world, infrastructure and platform tools (the behind-the-scenes but essential foundation) will be what sets companies apart.

The State of AI in 2025 — Models, Infrastructure, and Shifting Priorities

The End of Model-Centric Thinking

For the past eighteen months, the dominant narrative in AI was about raw model capability. “Which LLM is smartest?” dominated conversations. In 2025, that obsession finally began to fade.

OpenAI’s GPT-5, released in August 2025, claimed “state-of-the-art performance across coding, math, writing, health, visual perception, and more.” It introduced a novel dual-model approach, pairing a smart reasoning engine with an efficient fast model, optimized for different problem types.

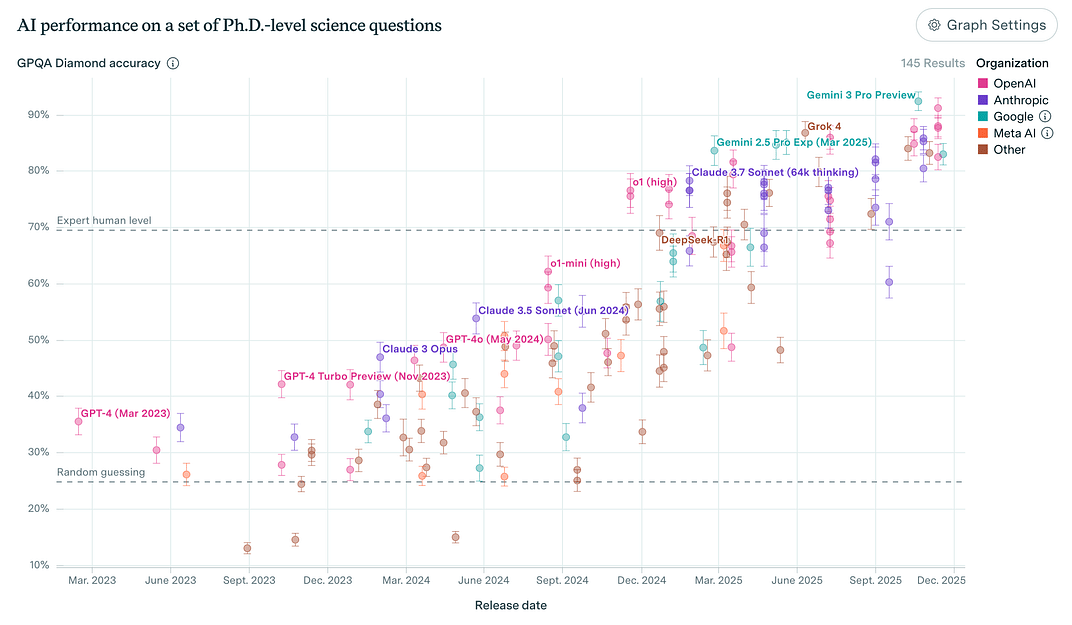

Google DeepMind’s Gemini 3, released in late 2025, set new records on standardized benchmarks (91.9% on GPQA Diamond and 1501 Elo on the LMArena leaderboard), with Google describing it as its “most intelligent model,” capable of PhD-level reasoning.

These advances were real and substantial. But they mattered far less in 2025 than what teams actually did with them.

The priorities that emerged in 2025 reflected a maturation in thinking:

Reliability over raw intelligence: Teams discovered that a model scoring 90% on a benchmark meant little if it hallucinated 5% of the time in production, or if error rates compounded through a multi-step workflow. Hallucination rates, determinism, and traceability became first-class concerns. Organizations stopped chasing the “smartest” model and started chasing models they could depend on.

Latency and cost as hard constraints: Once teams moved beyond proof-of-concept stages, response time and predictable unit economics became table stakes. A system that works but costs $10 per request doesn’t scale. A model that takes 30 seconds to respond doesn’t fit into a live customer interaction. The “best” model became the one that balanced capability with real-world production constraints.

Modality as a feature, not a headline: Vision, audio, and code understanding capabilities improved substantially in 2025, but the magic wore off. Teams began treating multimodal inputs and outputs as natural extensions of interface breadth, not as breakthroughs worthy of press releases. A vision model that could parse receipts with 95% accuracy felt like a tool, not a marvel.

Most critically — AI as a reasoning engine inside a larger system, not the product itself: By 2025, the most successful deployments shared a pattern. The model wasn’t the customer-facing interface or the business differentiator. Rather, it was a component: a powerful one, but a component orchestrated within larger workflows involving data systems, business logic, error handling, and human judgment. This shift moved the center of gravity away from “which model should we use?” and toward “how do we architect systems that use models effectively?”
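
The compounding-error point in the reliability item above is easy to quantify. The sketch below assumes every step in a workflow succeeds independently with the same probability, which is a simplification (real steps are often correlated), and the function name is illustrative rather than taken from any library.

```python
def workflow_success_rate(per_step_success: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent steps."""
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_success ** steps


if __name__ == "__main__":
    # A 5% per-step error rate corresponds to 95% per-step success.
    for steps in (1, 5, 10, 20):
        rate = workflow_success_rate(0.95, steps)
        print(f"{steps:>2} steps at 95% per-step success: {rate:.1%} end to end")
```

Under these assumptions, a ten-step workflow built on a model that is right 95% of the time per step finishes cleanly only about 60% of the time, which is why per-step reliability mattered more to teams than headline benchmark scores.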

The Rise of Agents and the Discovery That Glue is Hard

One of the most consequential narratives of 2025 was the maturing of agents and agentic AI. Nearly every department (HR, finance, product design, customer service) now claims to have an AI agent or copilot. But the successful ones shared a pattern, and the unsuccessful ones revealed a hard truth.

Successful agents in 2025 were:

Deeply embedded in one workflow, not generic assistants trying to do everything.

Tightly integrated with domain data, tools, and permissions, not disconnected from the systems they needed to influence.

Measurable, with clear success metrics (tickets resolved, time saved, revenue unlocked) rather than vanity metrics like engagement or prompt diversity.

But moving from demo agents (“book me a flight”) to production agents (reconcile invoices, trigger payouts, update CRM, escalate exceptions, maintain audit trails) proved to be mostly a systems problem, not a model problem. Organizations discovered that the real complexity lived in orchestration, state management, error handling, observability, and governance, not in the LLM itself.

A demo agent needs a good prompt. A production agent needs:

State management (what has been done, what remains, what’s in progress?)

Deterministic tool calling and fallback logic (what if the API fails?)

Observability (how do we know what the agent did and why?)

Error recovery (if step 3 failed, can we retry, or do we escalate?)

Auditability (for compliance, for debugging, for customer support)

Human oversight (where do humans need to review or approve decisions?)
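
To make the list above concrete, here is a minimal sketch of a step runner that covers state tracking, bounded retries, escalation, an audit log, and a human approval gate. Every name in it (`Step`, `RunState`, `run_workflow`, the `approve` callback) is hypothetical and chosen for illustration; it is not Lamatic's API or any particular framework's, and a real system would persist state and add timeouts and backoff.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class Step:
    name: str
    action: Callable[[Dict[str, Any]], Any]  # receives results of earlier steps
    max_attempts: int = 3
    needs_approval: bool = False  # human oversight gate


@dataclass
class RunState:
    status: Dict[str, str] = field(default_factory=dict)   # state management
    results: Dict[str, Any] = field(default_factory=dict)
    audit_log: List[Dict[str, str]] = field(default_factory=list)  # auditability

    def record(self, step: str, event: str, detail: str = "") -> None:
        self.audit_log.append({"step": step, "event": event, "detail": detail})


def run_workflow(
    steps: List[Step],
    approve: Callable[[str, RunState], bool],
    state: Optional[RunState] = None,
) -> RunState:
    """Run steps in order; pass a previous RunState to resume a halted run."""
    if state is None:
        state = RunState()
    for step in steps:
        state.status.setdefault(step.name, "pending")
    for step in steps:
        if state.status[step.name] == "done":
            continue  # already completed in an earlier run
        if step.needs_approval and not approve(step.name, state):
            state.status[step.name] = "awaiting_approval"
            state.record(step.name, "approval_denied")
            break
        state.status[step.name] = "in_progress"
        for attempt in range(1, step.max_attempts + 1):
            try:
                state.results[step.name] = step.action(state.results)
                state.status[step.name] = "done"
                state.record(step.name, "succeeded", f"attempt {attempt}")
                break
            except Exception as exc:  # error recovery: log and retry
                state.record(step.name, "failed", f"attempt {attempt}: {exc}")
        else:
            # Retries exhausted: stop and hand the run to a human.
            state.status[step.name] = "escalated"
            state.record(step.name, "escalated", "retries exhausted")
            break
    return state


if __name__ == "__main__":
    calls = {"n": 0}

    def fetch_invoice(_results: Dict[str, Any]) -> Dict[str, Any]:
        calls["n"] += 1
        if calls["n"] < 2:
            raise TimeoutError("billing API timed out")  # simulated flaky API
        return {"invoice_id": "INV-1", "amount": 120.0}

    def trigger_payout(results: Dict[str, Any]) -> str:
        return f"paid {results['fetch_invoice']['amount']}"

    final = run_workflow(
        [Step("fetch_invoice", fetch_invoice),
         Step("trigger_payout", trigger_payout, needs_approval=True)],
        approve=lambda name, state: True,  # stand-in for a real review step
    )
    print(final.status)
    for entry in final.audit_log:
        print(entry)
```

None of this logic involves the model. That is the point: the prompt is a small part of what a production agent needs, and the rest is ordinary systems engineering.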

This realization sent a clear signal: the infrastructure layer had become as important as the model. Teams that tried to build production agents by stringing together prompt engineering and API calls burned months on systems problems that should have been solved once, by a platform.

The Breakthroughs That Shaped 2025

In 2025, AI transcended hype-driven benchmarks to deliver practical, enterprise-grade advancements that redefined deployment and integration. While raw model capabilities continued to evolve, the year’s true impact lay in reliable reasoning structures, mature retrieval systems, multimodal inputs as standard, next-generation LLMs with architectural innovations, hardware accelerations, and widespread industry adoption.

These developments shifted AI from experimental prototypes to core operational infrastructure, enabling teams to build robust applications through better tools, efficiency, and human-like perception.

Next-Generation LLMs and Reasoning Maturity

LLMs in 2025 emphasized architectural sophistication over sheer scale, with convergence across leading providers narrowing performance gaps on reasoning tasks.

OpenAI’s GPT-5 pioneered a dual-model architecture: a reasoning-optimized model for complex, multi-step problems paired with a lightweight variant for speed and cost efficiency, underscoring that targeted training methodologies outperformed brute-force parameter growth.

Google’s Gemini 3 achieved record scores (91.9% on GPQA Diamond and 1501 Elo on LMArena), underscoring how closely the leading providers were competing on structured reasoning.

Anthropic’s Claude 4 family targeted long-running work, with 200K-token context windows as standard and a 1 million token window offered in beta for Claude Sonnet 4, supporting sustained work across large codebases and document sets.

Reasoning and structured tool use became more reliable: In parallel with these model releases, reasoning reliability improved through structured tool use rather than unstructured chain-of-thought prompting. Models handled sequential API calls, database queries, and service integrations more dependably when guided by JSON schemas and domain-specific planners, turning tool calling from a gimmick into a cornerstone of automation.

This maturity allowed pipelines to handle real-world workflows dependably, where free-form reasoning faltered under complexity.
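
As an illustration of schema-guided tool calling, the sketch below validates a model's JSON output against a declared argument schema before any tool runs. The tool name, the registry layout, and the `dispatch_tool_call` function are assumptions made for this example. The type checks are a hand-rolled stand-in for a full JSON Schema validator, and no specific provider's tool-calling format is implied.

```python
import json
from typing import Any, Dict

# Hypothetical tool registry: each tool declares the arguments it accepts.
TOOLS: Dict[str, Dict[str, Any]] = {
    "lookup_invoice": {
        "required": ["invoice_id"],
        "properties": {"invoice_id": str, "include_lines": bool},
        # Stub handler; a real one would query a billing system.
        "handler": lambda args: {"invoice_id": args["invoice_id"], "status": "open"},
    }
}


class ToolCallError(ValueError):
    """Raised when model output is not a valid call to a registered tool."""


def dispatch_tool_call(raw_model_output: str) -> Any:
    """Parse, validate, and only then execute a tool call emitted by a model."""
    try:
        call = json.loads(raw_model_output)
    except json.JSONDecodeError as exc:
        raise ToolCallError(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ToolCallError("tool call must be a JSON object")

    name = call.get("tool")
    if not isinstance(name, str) or name not in TOOLS:
        raise ToolCallError(f"unknown tool: {name!r}")
    tool = TOOLS[name]

    args = call.get("arguments", {})
    if not isinstance(args, dict):
        raise ToolCallError("arguments must be a JSON object")
    missing = [key for key in tool["required"] if key not in args]
    if missing:
        raise ToolCallError(f"missing required arguments: {missing}")
    for key, value in args.items():
        expected = tool["properties"].get(key)
        if expected is None:
            raise ToolCallError(f"unexpected argument: {key!r}")
        if not isinstance(value, expected):
            raise ToolCallError(f"argument {key!r} must be {expected.__name__}")

    return tool["handler"](args)


if __name__ == "__main__":
    good = '{"tool": "lookup_invoice", "arguments": {"invoice_id": "INV-1"}}'
    print(dispatch_tool_call(good))
    try:
        dispatch_tool_call('{"tool": "lookup_invoice", "arguments": {"invoice_id": 7}}')
    except ToolCallError as err:
        print("rejected:", err)
```

Rejecting a malformed call at this boundary gives the surrounding pipeline a clear failure to retry or escalate, instead of letting a bad argument reach a downstream system.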

Retrieval-Augmented Generation (RAG) as an Information Discipline

RAG evolved from rudimentary vector search into a sophisticated engineering practice, proving that system design outweighed retriever algorithms in performance.

Source: Google Gemini

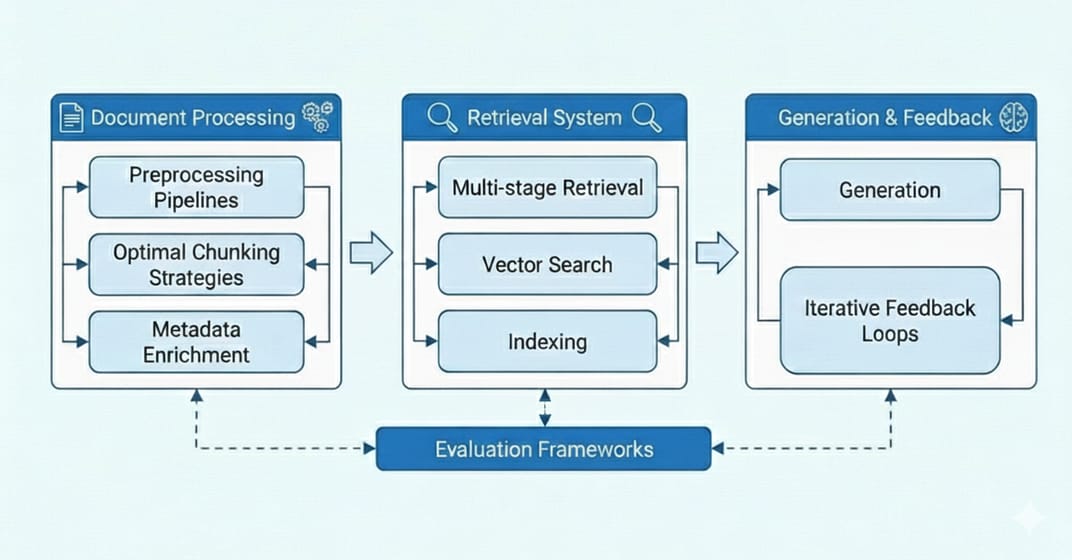

Advanced implementations incorporated preprocessing pipelines, optimal chunking strategies, metadata enrichment, multi-stage retrieval, and iterative feedback loops tailored to domain needs.

Evaluation frameworks emphasized domain-specific metrics, revealing that high-quality RAG hinged on organizational intelligence — how documents were indexed and queried — rather than embedding tech alone. This shift made RAG a scalable backbone for knowledge-intensive applications, from legal research to customer support.
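
A small sketch shows what chunking, metadata enrichment, and multi-stage retrieval look like in practice. It is deliberately simplified: simple term-overlap scoring stands in for an embedding model, and the names `Chunk`, `chunk_document`, and `retrieve` are invented for this example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Chunk:
    text: str
    metadata: Dict[str, str]


def chunk_document(
    text: str, metadata: Dict[str, str], size: int = 40, overlap: int = 10
) -> List[Chunk]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    chunks: List[Chunk] = []
    step = size - overlap
    for start in range(0, len(words), step):
        window = words[start:start + size]
        # Metadata enrichment: each chunk keeps its source fields and position.
        chunks.append(Chunk(" ".join(window), {**metadata, "start_word": str(start)}))
        if start + size >= len(words):
            break  # this window already reached the end of the document
    return chunks


def retrieve(
    query: str, chunks: List[Chunk], filters: Dict[str, str], top_k: int = 3
) -> List[Chunk]:
    """Stage 1: filter on metadata. Stage 2: rank survivors by shared query terms."""
    candidates = [
        c for c in chunks
        if all(c.metadata.get(key) == value for key, value in filters.items())
    ]
    query_terms = set(query.lower().split())

    def score(chunk: Chunk) -> int:
        return len(query_terms & set(chunk.text.lower().split()))

    ranked = sorted(candidates, key=score, reverse=True)
    return [c for c in ranked if score(c) > 0][:top_k]


if __name__ == "__main__":
    corpus = (
        chunk_document("Refunds are issued within thirty days of purchase.",
                       {"doc_type": "policy"})
        + chunk_document("Draft memo about refunds, not yet approved.",
                         {"doc_type": "memo"})
    )
    for hit in retrieve("when are refunds issued", corpus, {"doc_type": "policy"}):
        print(hit.metadata, "->", hit.text)
```

Even in this toy version, the metadata filter decides which documents are eligible before any ranking happens, which is the sense in which indexing and query design matter as much as the scoring method.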

Multimodality: From Novelty to Default Perception

Multimodal capabilities matured to enterprise reliability, expanding input modalities beyond text to mirror human sensory processing.

Vision models parsed UIs, diagrams, PDFs, receipts, and screenshots with sufficient accuracy for business automation, elevating document understanding to an expected feature.

Speech recognition enabled credible voice interfaces for support and internal tools, while video analysis gained utility in moderation, asset tracking, and analytics.

This “perceptual” expansion unlocked B2B operations, allowing systems to ingest visual and auditory data natively rather than converting everything to prompts.

Hardware and Infrastructure Enablers

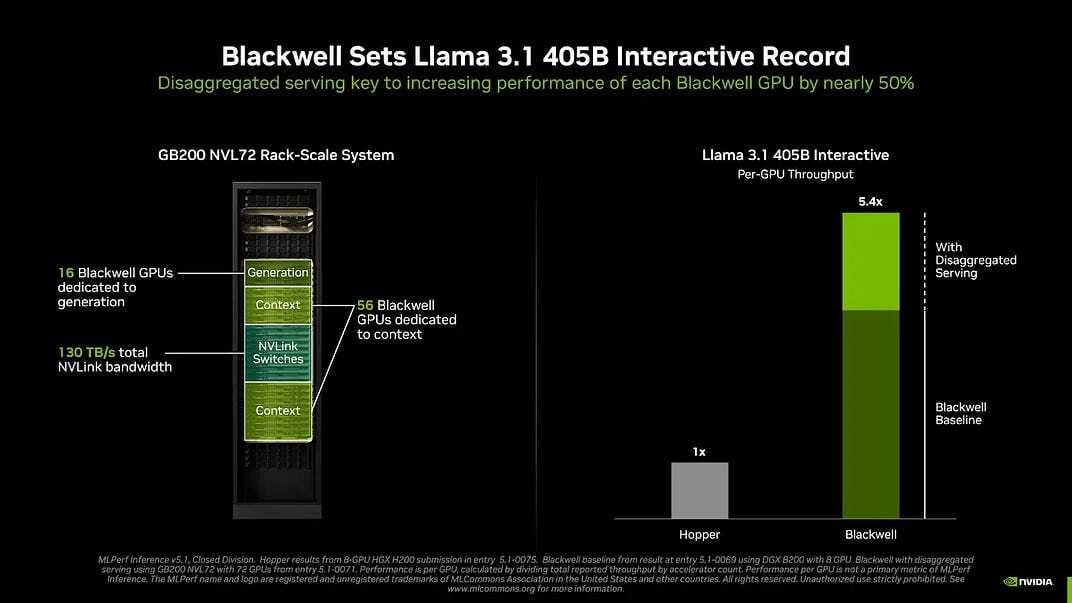

Deployment feasibility accelerated with NVIDIA’s Blackwell B300 GPUs, which doubled Hopper-era throughput and offered up to 288 GB HBM3e memory per chip.

These advances supported massive model training and low-latency inference at scale, extending to cloud and edge environments. Such infrastructure democratized high-performance AI, making real-time applications practical beyond research labs.

Industry Adoption and Real-World Deployment

By 2025, AI had moved beyond demos into real products:

Healthcare systems deployed AI for note-taking, scheduling, and image diagnostics.

Businesses launched AI-driven tools for legal research, HR operations, marketing, and customer service.

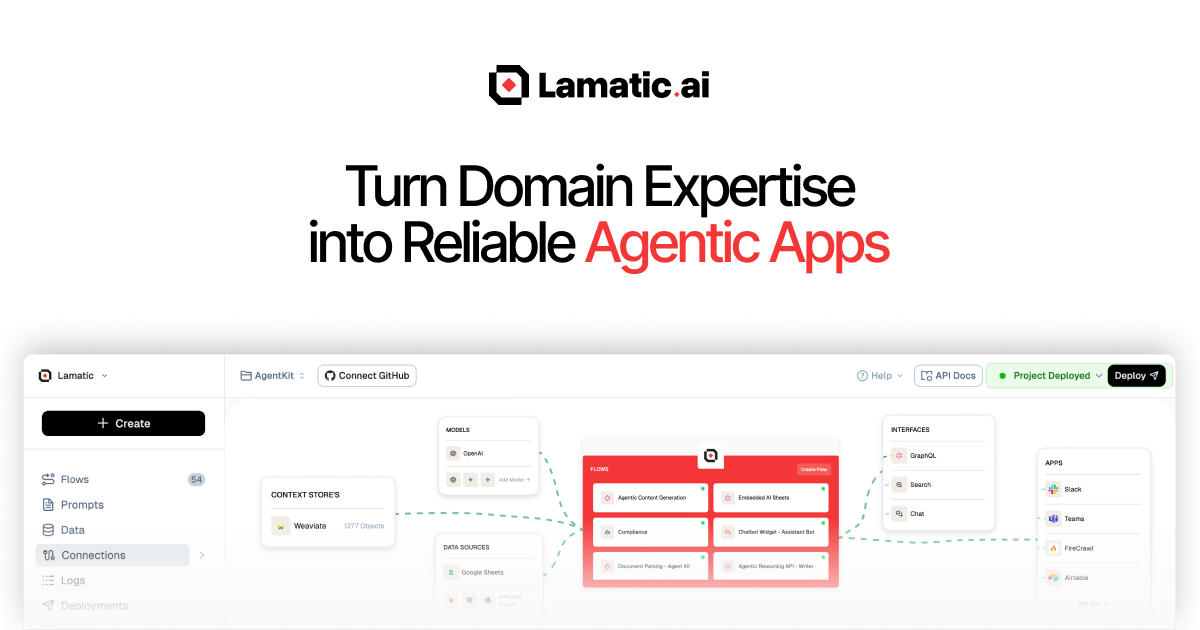

Developer platforms such as Lamatic.ai emerged to simplify AI project development and deployment.

These breakthroughs collectively marked 2025’s pivot: AI’s value now derived from integration reliability, cost efficiency, and multimodal utility, positioning it as indispensable infrastructure rather than isolated capability.

Predictions and Priorities for 2026

Enterprise Scale: From Pilots to Programs

One of the most significant shifts expected in 2026 is the move from scattered pilots to enterprise-wide AI programs. PwC predicts that in 2026, businesses will adopt centralized “AI studios” with top-down leadership — not scattered, siloed experiments.

This shift has important implications. Instead of dozens of teams running isolated proof-of-concepts, we’ll see organizations identify the highest-impact workflows, invest in them properly, and use success stories to justify expansion. This is a move from “experimentation” to “operationalization.”

For vendors and platforms, this means that 2026 will favor tools that help enterprises:

Design workflows systematically

Measure impact rigorously

Deploy reliably at scale

Iterate safely when models or requirements change

Rise of AI Agents (Done Well)

Autonomous agents are expected to play a bigger role in 2026, but with a key caveat: only those built on solid orchestration foundations.

Consulting firms predict that AI agents will increasingly automate complex, high-value processes in finance (invoice matching, reconciliation), HR (scheduling, policy lookup), product design (ideation, prototyping), and operations. But translating “the agent can do this in a demo” to “the agent does this reliably in production” requires robust platforms that handle state, error recovery, observability, and human oversight.

This is exactly where we at Lamatic position ourselves: providing the infrastructure to orchestrate agents that do real work, not just chat.

Responsible, Safe, and Regulated AI

As AI spreads into mission-critical workflows, regulation and governance will become central. The EU AI Act entered into force in August 2024, and its first obligations began to apply during 2025; from August 2026, transparency rules for generative AI and strict requirements for many high-risk systems are scheduled to apply. Similar moves are expected globally.

For organizations, this means:

Investment in safety, bias mitigation, and auditability

Documentation of training data, model provenance, and decision processes

Testing and evaluation frameworks tied to regulatory requirements

Human oversight and escalation procedures

This regulatory tightening will favor organizations and platforms that built governance into their DNA, rather than bolting it on afterward.

Model Specialization and Efficiency

As the frontier-model arms race continues, 2026 will see increased focus on specialized models and efficiency gains:

Specialized models for coding, science, robotics, and domain-specific tasks will improve, offering better performance and lower latency than general-purpose models.

Efficiency gains through smaller, faster models and on-device AI will reduce costs and latency.

Open-source models will continue improving, with new releases aiming for GPT-5 parity or better.

Chip competition will increase, with new AI accelerators and cloud offerings lowering the infrastructure barrier.

This democratization of capability will shift value up the stack:

From “which model should we use?” to “how do we orchestrate and integrate models effectively?”

From Hype to Measured ROI

One of the most important trends expected in 2026 is the move from hype to measured ROI. Only a few early adopters have seen transformative gains so far. In 2026, many firms will try to learn from them by:

Measuring ROI carefully

Focusing on use cases with clear payoff

Building internal expertise (“AI generalist” teams)

Investing in platforms that enable fast, safe iteration

We’ll likely hear more about “AI-enabled” productivity boosts in finance, supply chain, customer support, and operations — not because these areas are new, but because high-performing companies will move beyond proofs-of-concept into real products that move the needle on meaningful metrics.

The Role of Infrastructure Platforms — Why Lamatic Matters

The “Unsexy” Truth About Production AI

As 2025 demonstrated, the gap between “an AI demo that works” and “an AI system in production” isn’t a modeling problem — it’s an infrastructure problem. Models provide the intelligence, but infrastructure provides the reliability, observability, and trust.

At Lamatic, we built our platform on a simple premise: your engineers should be solving business problems, not rebuilding the same orchestration plumbing that every other company is struggling with. We solve the predictable, recurring headaches that kill momentum:

Orchestration: How do you chain multiple models, tools, and data systems into a coherent workflow?

Safety: How do you test and evaluate changes without breaking what’s already live?

Observability: When (not if) the system fails, can you see exactly why in seconds?

Agility: How do you hot-swap a model or update a prompt without downtime?

Governance: Who changed what, and is there an audit trail?
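
Several of these questions can be combined into one small pattern: a model slot that accepts a replacement only if it passes an evaluation set, and that records who attempted the change either way. This is a hedged sketch with hypothetical names (`ModelSlot`, `swap`), with models represented as plain functions and exact-match scoring standing in for a real evaluation suite. It is not a description of how Lamatic implements these features.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List, Tuple

Model = Callable[[str], str]  # a model is represented as prompt -> response


@dataclass
class ModelSlot:
    """A named slot in a workflow whose model can be replaced at runtime."""
    name: str
    active: Model
    audit_log: List[Dict[str, Any]] = field(default_factory=list)

    def swap(
        self,
        candidate: Model,
        eval_cases: List[Tuple[str, str]],
        min_pass_rate: float,
        changed_by: str,
    ) -> bool:
        """Activate `candidate` only if it passes enough evaluation cases."""
        if not eval_cases:
            raise ValueError("refusing to swap without evaluation cases")
        passed = sum(
            1 for prompt, expected in eval_cases if candidate(prompt) == expected
        )
        pass_rate = passed / len(eval_cases)
        accepted = pass_rate >= min_pass_rate
        if accepted:
            self.active = candidate  # callers of slot.active see it immediately
        # Governance: record every attempt, accepted or not.
        self.audit_log.append({
            "slot": self.name,
            "changed_by": changed_by,
            "pass_rate": pass_rate,
            "accepted": accepted,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return accepted


if __name__ == "__main__":
    cases = [("refund please", "billing"), ("reset password", "account")]
    slot = ModelSlot("ticket_router", active=lambda prompt: "unknown")

    def candidate(prompt: str) -> str:
        return "billing" if "refund" in prompt else "account"

    print("accepted:", slot.swap(candidate, cases, min_pass_rate=1.0, changed_by="alice"))
    print(slot.active("reset password"))
    print(slot.audit_log[-1])
```

The same gate works for prompt changes as well as model changes, since from the workflow's point of view both are simply a new function behind the slot.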

These aren’t “sexy” problems. But they are universal. Teams that solve them once, at the platform level, ship 10x faster than teams solving them ad hoc for every single workflow.

What We Shipped in Lamatic 3.0 (and Why)

In late 2025, we released Lamatic 3.0. This wasn’t just a feature update; it was a response to the pain points we saw developers facing daily.

The New Flow Builder: We moved from code-heavy configuration to a visual canvas. Why? Because spaghetti code is where AI workflows go to die. Our drag-and-drop interface allows you to connect AI components like building blocks, making workflows maintainable and accessible to the whole team, not just the Python experts.

Prompt IDE & Engineering: Prompt engineering isn’t a one-time task; it’s a constant loop. We built a dedicated workspace with an intelligent assistant to help rewrite, optimize, and refine prompts instantly.

Embedded AI Assistants: We put AI inside the IDE. Whether you need real-time guidance on what node to add next or help writing a transformation script, our Flow and Code Assistants are there to lower the barrier to entry and prevent careless errors.

Skeptics might call this marketing. But as engineers, we look at the math: if these tools give you even a 5x speedup in moving from idea to production, that is the difference between leading the market and chasing it.

Our Thesis for 2026

We are betting the company on a specific vision of the future:

The next wave of AI value will not come from better models, but from better orchestration, evaluation, and governance of the models we already have.

This thesis rests on four engineering realities:

Marginal Utility of Models: Models are already incredibly capable. The bottleneck is no longer “is the model smart enough,” but “can we integrate it reliably?”

Integration is the moat: Most competitors have access to the same LLMs. The winner is the company that integrates them tightly with proprietary data and business logic.

Change is the only constant: New models arrive quarterly. Regulations tighten annually. If your stack is rigid, you lose. If you can swap components easily, you win.

Governance is a feature: Audit trails, human-in-the-loop, and bias monitoring are no longer optional compliance checkboxes — they are requirements for deployment.

A Note to Builders

If you are a founder, engineer, or technical leader, my advice is simple: Treat infrastructure as a strategic priority.

The companies that win in 2026 won’t be the ones with the most ML PhDs. They will be the ones that treat AI as an operational discipline and invest in platforms that make design, testing, and deployment boringly reliable.

As a founding engineer at Lamatic, my focus is helping your team move from “promising prototype” to “production-grade workflow” without getting stuck in the mud of custom tooling. If your goal is to ship fast and sleep well at night, Lamatic is built to be your execution surface.

Conclusion: From Demos to Discipline

2025 will be remembered as the year AI transitioned from “What’s possible?” to “How do we do this reliably?” The breakthroughs in models, multimodality, and reasoning capability were real and substantial. But the more important story was organizational: teams learning to design, deploy, and iterate on AI systems in production.

The bubble concerns are valid but incomplete. Yes, parts of the AI market are overheated and will correct. But the technology is advancing genuinely, and the organizational capability to deploy it is improving. The question is not whether AI will reshape business, but which organizations will execute competently enough to capture value before capital retreats.

2026 will test this execution capability. It will separate the companies that built discipline from those that rode hype. It will favor platforms and approaches that make orchestration, evaluation, and iteration fast and safe. It will punish those that treat AI as a one-off project rather than an evolving practice.

For organizations building in this landscape, the message is clear: invest in the plumbing. The intelligent assistants, orchestration platforms, observability tools, and governance frameworks that sound boring and technical are actually where leverage lives. Lamatic embodies this philosophy — reducing friction, embedding best practices, and making it easier to turn AI capability into business value.

As 2025 closes and 2026 begins, the most important work in AI is not happening in model architecture papers or benchmark tables. It’s happening in the unglamorous but essential work of building systems that last, adapt, and deliver measurable value. That’s the game in 2026. And the winners will be those who started playing it in 2025.

Thanks for reading! 🙌

We appreciate you taking the time to explore how AI shifted from hype to infrastructure in 2025, and what that means for your organization in 2026.

If you found value in this analysis, don’t stop here.

The real work happens when you start building.